Study design features increase replicability in brain-wide association studies

Study design features increase replicability in brain-wide association studies"

- Select a language for the TTS:

- UK English Female

- UK English Male

- US English Female

- US English Male

- Australian Female

- Australian Male

- Language selected: (auto detect) - EN

Play all audios:

ABSTRACT Brain-wide association studies (BWAS) are a fundamental tool in discovering brain–behaviour associations1,2. Several recent studies have shown that thousands of study participants

are required for good replicability of BWAS1,2,3. Here we performed analyses and meta-analyses of a robust effect size index using 63 longitudinal and cross-sectional MRI studies from the

Lifespan Brain Chart Consortium4 (77,695 total scans) to demonstrate that optimizing study design is critical for increasing standardized effect sizes and replicability in BWAS. A

meta-analysis of brain volume associations with age indicates that BWAS with larger variability of the covariate and longitudinal studies have larger reported standardized effect size.

Analysing age effects on global and regional brain measures from the UK Biobank and the Alzheimer’s Disease Neuroimaging Initiative, we showed that modifying study design through sampling

schemes improves standardized effect sizes and replicability. To ensure that our results are generalizable, we further evaluated the longitudinal sampling schemes on cognitive,

psychopathology and demographic associations with structural and functional brain outcome measures in the Adolescent Brain and Cognitive Development dataset. We demonstrated that commonly

used longitudinal models, which assume equal between-subject and within-subject changes can, counterintuitively, reduce standardized effect sizes and replicability. Explicitly modelling the

between-subject and within-subject effects avoids conflating them and enables optimizing the standardized effect sizes for each separately. Together, these results provide guidance for study

designs that improve the replicability of BWAS. SIMILAR CONTENT BEING VIEWED BY OTHERS REPLICABLE BRAIN–PHENOTYPE ASSOCIATIONS REQUIRE LARGE-SCALE NEUROIMAGING DATA Article 26 June 2023

REPRODUCIBLE BRAIN-WIDE ASSOCIATION STUDIES REQUIRE THOUSANDS OF INDIVIDUALS Article Open access 16 March 2022 BRAIN AGEING IN SCHIZOPHRENIA: EVIDENCE FROM 26 INTERNATIONAL COHORTS VIA THE

ENIGMA SCHIZOPHRENIA CONSORTIUM Article Open access 09 December 2022 MAIN BWAS use non-invasive MRI to identify associations between inter-individual differences in behaviour, cognition,

biological or clinical measurements and brain structure or function1,2. A fundamental goal of BWAS is to identify true underlying biological associations that improve our understanding of

how brain organization and function are linked to health across the lifespan. Recent studies have raised concerns about the replicability of BWAS1,2,3. Statistical replicability is typically

defined as the probability of obtaining consistent results from hypothesis tests across different studies. Like statistical power, replicability is a function of both the standardized

effect size and the sample size5,6,7. Low replicability in BWAS has been attributed to a combination of small sample sizes, small standardized effect sizes and bad research practices (such

as _p_-hacking and publication bias)1,2,8,9,10,11,12. The most obvious solution to increase the replicability in BWAS is to increase study sample sizes. Several recent studies have shown

that thousands of study participants are required to obtain replicable findings in BWAS1,2. However, massive sample sizes are often infeasible in practice. Standardized effect sizes (such as

Pearson’s correlation and Cohen’s _d_) are statistical values that not only depend on the underlying biological association in the population but also on the study design. Two studies of

the same biological effect with different study designs will have different standardized effect sizes. For example, contrasting brain function of groups with depression versus those without

depression will have a different Cohen’s _d_ effect size if the study design measures more extreme depressed states contemporaneously with measures of brain function, as opposed to less

extreme depressed states, even if the underlying biological effect is the same. Although researchers cannot increase the magnitude of the underlying biological association, its standardized

effect size — and thus its replicability — can be increased by critical features of study design. In this study, we focus on identifying modifiable study design features that can be used to

improve the replicability of BWAS by increasing standardized effect sizes. Increasing standardized effect sizes through study design before data collection stands in contrast to bad research

practices that can artificially inflate reported effect sizes, such as _p_-hacking and publication bias. There has been very little research regarding how modifications to the study design

might improve BWAS replicability. Specifically, we focus on two major design features that directly influence standardized effect sizes: variation in sampling scheme and longitudinal

designs1,13,14,15. Of note, these design features can be implemented without inflating the sample estimate of the underlying biological effect when using correctly specified models16. By

increasing the replicability of BWAS through study design, we can more efficiently utilize the US$1.8 billion average annual investment in neuroimaging research from the US National

Institutes of Health (https://reporter.nih.gov/search/_dNnH1VaiEKU_vZLZ7L2xw/projects/charts). Here we conducted a comprehensive investigation of cross-sectional and longitudinal BWAS

designs by capitalizing on multiple large-scale data resources. Specifically, we begin by analysing and meta-analysing 63 neuroimaging datasets including 77,695 scans from 60,900 cognitively

normal participants from the Lifespan Brain Chart Consortium4 (LBCC). We leverage data from the UK Biobank (UKB; up to 29,031 scans), the Alzheimer’s Disease Neuroimaging Initiative (ADNI;

2,232 scans) and the Adolescent Brain Cognitive Development study (ABCD; up to 17,210 scans) to investigate the most commonly measured phenotypes of brain structure and function. To ensure

that our results are broadly generalizable, we evaluated associations with diverse covariates of interest, including age, sex, cognition and psychopathology. To facilitate comparison between

BWAS designs, we also introduce a new version of the robust effect size index (RESI)17,18,19 that allows us to demonstrate how longitudinal study design directly impacts standardized effect

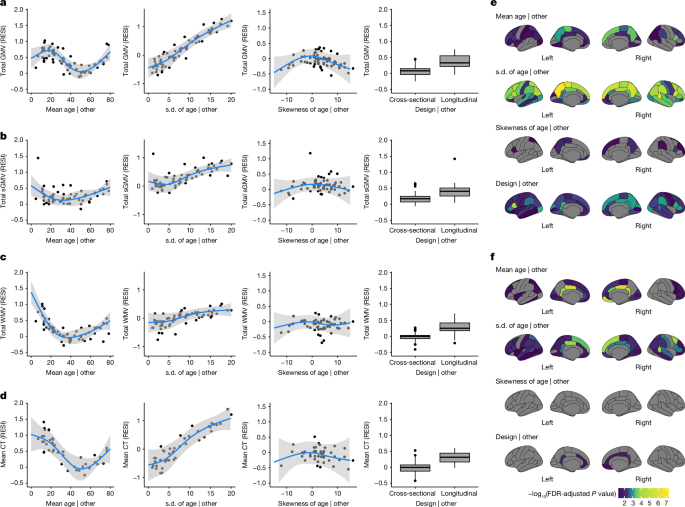

sizes. STANDARDIZED EFFECT SIZES DEPEND ON STUDY DESIGN To fit each study-level analysis, we regressed each of the global brain measures (total grey matter volume (GMV), total subcortical

grey matter volume (sGMV), total white matter volume (WMV) and mean cortical thickness) and regional brain measures (regional GMV and cortical thickness, based on Desikan–Killiany

parcellation20) on sex and age in each of the 63 neuroimaging datasets from the LBCC. Age was modelled using a non-linear spline function in linear regression models for the cross-sectional

datasets and generalized estimating equations (GEEs) for the longitudinal datasets (Methods). Site effects were removed before the regressions using ComBat21,22 (Methods). Analyses for total

GMV, total sGMV and total WMV used all 63 neuroimaging datasets (16 longitudinal; Supplementary Table 1). Analyses of regional brain volumes and cortical thickness used 43 neuroimaging

datasets (13 longitudinal; Methods and Supplementary Table 2). Throughout the present study, we used the RESI17,18,19 as a measure of standardized effect size. The RESI is a recently

developed index that is equal to 1/2 Cohen’s _d_ under the same assumptions for Cohen’s _d_17 (Methods; section 3 in Supplementary Information). We used the RESI as a standardized effect

size because it is broadly applicable to many types of models and is robust to model misspecification. To investigate the effects of study design features on the RESI, we performed

meta-analyses for the four global brain measures and two regional brain measures in the LBCC to model the association of study-level design features with standardized effect sizes. Study

design features are quantified as the sample mean, standard deviation and skewness of the age covariate as non-linear terms, and a binary variable indicating the design type (cross-sectional

or longitudinal). After obtaining the estimates of the standardized effect sizes of age and sex in each analysis of the global and regional brain measures, we conducted meta-analyses of the

estimated standardized effect sizes using weighted linear regression models with study design features as covariates (Methods). For total GMV, the partial regression plots of the effect of

each study design feature demonstrate a strong cubic-shape relationship between the standardized effect size for total GMV–age association and study population mean age. This cubic shape

indicates that the strength of the age effect varies with respect to the age of the population being studied. The largest age effect on total GMV in the human lifespan occurs during early

and late adulthood (Fig. 1a and Supplementary Table 3). There is also a strong positive linear effect of the study population standard deviation of age and the standardized effect size for

total GMV–age association. For each unit increase in the standard deviation of age (in years), the expected standardized effect size increases by about 0.1 (Fig. 1a). This aligns with the

well-known relationship between correlation strength and covariate standard deviation indicated by statistical principles23. Plots for total sGMV, total WMV and mean cortical thickness show

U-shaped changes of the age effect with respect to the study population mean age (Fig. 1b–d). A similar but sometimes weaker relationship is shown between expected standardized effect size

and study population standard deviation and skewness of the age covariate (Fig. 1b–d and Supplementary Tables 4–6). Finally, the meta-analyses also show a moderate effect of study design on

the standardized effect size of age on each of the global brain measures (Fig. 1a–d and Supplementary Tables 3–6). The average standardized effect size for total GMV–age associations in

longitudinal studies (RESI = 0.39) is substantially larger than in cross-sectional studies (RESI = 0.08) after controlling for the study design variables, corresponding to a more than 380%

increase in the standardized effect size for longitudinal studies. This value quantifies the systematic differences in the standardized effect sizes between the cross-sectional and

longitudinal studies among the 63 neuroimaging studies. Of note, longitudinal study design does not improve the standardized effect size for biological sex, because sex does not vary within

participants in these studies (Supplementary Tables 7–10 and Supplementary Fig. 2). For regional GMV and cortical thickness, similar effects of study design features also occur across

regions (Fig. 1e,f; 34 regions per hemisphere). In most of the regions, the standardized effect sizes of age on regional GMV and cortical thickness are strongly associated with the study

population standard deviation of age. Longitudinal study designs generally tend to have a positive effect on the standardized effect sizes for regional GMV–age associations and a positive

but weaker effect on the standardized effect sizes for regional cortical thickness–age associations. To improve the comparability of standardized effect sizes between cross-sectional and

longitudinal studies, we propose a new effect size index: the cross-sectional RESI for longitudinal datasets (section 3 in Supplementary Information). The cross-sectional RESI for

longitudinal datasets represents the RESI in the same study population, if the longitudinal study had been conducted cross-sectionally. This newly developed effect size index allows us to

quantify the benefit of using a longitudinal study design in a single dataset (section 3.3 in Supplementary Information). The meta-analysis results demonstrate that standardized effect sizes

are dependent on study design features, such as mean age, standard deviation of the age of the sample population, and cross-sectional or longitudinal design. Moreover, the results suggest

that modifying study design features, such as increasing variability and conducting longitudinal studies, can increase the standardized effect sizes in BWAS. IMPROVED SAMPLING BOOSTS

REPLICABILITY To investigate the effect of modifying the variability of the age covariate on increasing standardized effect sizes and replicability, we implemented three sampling schemes

that produce different sample standard deviations of the age covariate. We treated the large-scale cross-sectional UKB data as the population and draw samples whose age distributions follow

a pre-specified shape (bell shaped, uniform and U shaped; Methods and Extended Data Fig. 1a). In the UKB, the U-shaped sampling scheme on age increases the standardized effect size for the

total GMV–age association by 60% compared with bell shaped and by 27% compared with uniform (Fig. 2a), with an associated increase in replicability (Fig. 2b). To achieve 80% replicability

for detecting the total GMV–age association (Methods), fewer than 100 participants are sufficient if using the U-shaped sampling scheme, whereas about 200 participants are needed if the

bell-shaped sampling scheme is used (Fig. 2b). A similar pattern can be seen for the regional outcomes of GMV and cortical thickness (Fig. 2c–f). The U-shaped sampling scheme typically

provides the largest standardized effect sizes of age and the highest replicability, followed by the uniform and bell-shaped schemes. The U-shaped sampling scheme shows greater

region-specific improvement in the standardized effect sizes and replicability for regional GMV–age and regional cortical thickness–age associations than the bell-shaped scheme (Extended

Data Fig. 1d,e). To investigate the effect of increasing the variability of the age covariate longitudinally, we implemented sampling schemes to adjust the between-subject and within-subject

variability of age in the bootstrap samples from the longitudinal ADNI dataset. In the bootstrap samples, each participant had two measurements (baseline and a follow-up). To imitate the

true operation of a study, we selected the two measurements of each participant based on baseline age and the follow-up age by targeting specific distributions for the baseline age and the

age change at the follow-up time point (Methods; Extended Data Fig. 1b,c). Increasing between-subject and within-subject variability of age increases the average observed standardized effect

sizes, with corresponding increases in replicability (Fig. 3). A U-shaped between-subject sampling scheme on age increases the standardized effect size for total GMV–age association by

23.6% compared with bell shaped and by 12.1% compared with uniform, when using the uniform within-subject sampling scheme (Fig. 3a). In addition, we investigated the effect of the number of

measurements per participant on the standardized effect size and replicability in longitudinal data using the ADNI dataset. Adding a single additional measurement after the baseline

increases the standardized effect size for total GMV–age association by 156% and replicability by 350%. The benefit of additional measurements is minimal (Fig. 3c,d). Finally, we also

evaluated the effects of the longitudinal sampling schemes on regional GMV and cortical thickness in the ADNI dataset (Fig. 3e–h). When sampling two measurements per participant, the

between-subject and within-subject sampling schemes producing larger age variability increase the standardized effect size and replicability across most regions. Together, these results

suggest that having larger spacing in between-subject and within-subject age measurements increases standardized effect size and replicability. Most of the benefit of the number of

within-subject measurements is due to the first additional measurement after baseline. SAMPLING BENEFIT VARIES BY BRAIN MEASURE As standardized effect sizes for brain–age associations are

often larger than for brain–behaviour associations, we investigated whether the proposed sampling schemes are effective on various non-brain covariates and their associations with structural

and functional brain measures in all participants (with and without neuropsychiatric symptoms) with cross-sectional and longitudinal measurements from the ABCD dataset. The non-brain

covariates include the NIH toolbox24, Child Behavior Checklist (CBCL), body mass index (BMI), birth weight and handedness (Methods; Supplementary Tables 11 and 12). Functional connectivity

is used as a functional brain measure and is computed for all pairs of regions in the Gordon atlas25 (Methods). We used the bell-shaped and U-shaped target sampling distributions to control

the between-subject and within-subject variability of each non-brain covariate (Methods). For each non-brain covariate, we show the results for the four combinations of between-subject and

within-subject sampling schemes. Overall, there is a consistent benefit to increasing between-subject variability of the covariate (Fig. 4 and Extended Data Fig. 2). These preferred sampling

schemes lead to more than 1.8 factor reduction in sample size for 80% replicability and more than 1.4 factor increase in the standardized effect size for over 50% of associations. Moreover,

72% of covariate-outcome associations had increased standardized effect sizes by increasing the between-subject variability of the covariates (Extended Data Fig. 3). Importantly, increasing

within-subject variability decreases the standardized effect sizes for many structural associations (Fig. 4a–f and Extended Data Fig. 2a–f), suggesting that conducting longitudinal analyses

can result in decreased replicability compared with cross-sectional analyses. For the functional connectivity outcomes, there is a slight positive effect of increasing within-subject

variability (Fig. 4g,h and Extended Data Fig. 2g,h). To evaluate the lower replicability of the structural associations with increasing within-subject variability, we compared

cross-sectional standardized effect sizes of the non-brain covariates on each brain measure using the baseline measurements to the standardized effect sizes estimated using the full

longitudinal data (Fig. 5a–d and Extended Data Fig. 4). Consistent with the reduction in standardized effect size by increasing within-subject variability, for most structural associations

(GMV and cortical thickness), conducting cross-sectional analyses using the baseline measurements results in larger standardized effect sizes (and higher replicability) than conducting

analyses using the full longitudinal data. This finding holds when fitting a cross-sectional model only using the 2-year follow-up measurement (Extended Data Fig. 4). Identical results are

found using linear mixed models with individual-specific random intercepts, which are commonly used in BWAS (Supplementary Fig. 3). Together, these results suggest that the benefit of

conducting longitudinal studies and larger within-subject variability is highly dependent on the brain–behaviour association. Counterintuitively, longitudinal designs can reduce the

standardized effect sizes and replicability. ACCURATE LONGITUDINAL MODELS ARE CRUCIAL To investigate why increasing within-subject variability or using longitudinal designs is not beneficial

for some associations, we examined an assumption common to GEEs and linear mixed models in BWAS. These widely used models assume that there is consistent association strength between the

brain measure and non-brain covariate across between-subject and within-subject changes in the non-brain covariate. However, the between-subject and within-subject association strengths can

differ because non-brain measures can be more variable than structural brain measures for various reasons. For example, crystallized composite scores may vary within a participant

longitudinally because of time-of-day effects, lack of sleep or natural noise in the measurement. By contrast, GMV is more precise and it is not vulnerable to other sources of variability

that might accompany the crystallized composite score. This combination leads to a low within-subject association between these variables (Supplementary Table 13). Functional connectivity

measures are more similar to crystallized composite scores in that they are subject to higher within-subject variability and natural noise, so they have a higher potential for stronger

within-subject associations with crystallized composite scores (that is, they are more likely to vary together based on many factors such as time of day and lack of sleep). To demonstrate

this, we fitted models that estimated distinct effects for between-subject and within-subject associations in the ABCD dataset (Methods) and found that there are large between-subject

parameter estimates and small within-subject parameter estimates in total and regional GMV (Fig. 5e, Supplementary Table 13 and section 5.2 in Supplementary Information), whereas the

functional connectivity associations are distributed more evenly across between-subject and within-subject parameters (Fig. 5f). If the between-subject and within-subject associations are

different, these widely used longitudinal models average the two associations (equation (13) in section 5 in Supplementary Information). Fitting these associations separately avoids

averaging the larger effect with the smaller effect and can inform our understanding of brain–behaviour associations (section 5.2 in Supplementary Information). This approach ameliorates the

reduction in standardized effect sizes caused by longitudinal designs for structural brain measures in the ABCD (Extended Data Figs. 5 and 6 and section 5 in Supplementary Information).

This longitudinal model has a similar between-subject standardized effect size to the cross-sectional model (see ‘Estimation of the between-subject and within-subject effects’ in the Methods

section; Extended Data Fig. 6). In short, longitudinal designs can be detrimental to replicability when the between-subject and within-subject effects differ and the model is incorrectly

specified. OPTIMAL DESIGN CONSIDERATIONS With increasing evidence of small standardized effect sizes and low replicability in BWAS, optimizing study design to increase standardized effect

sizes and replicability is a critical prerequisite for progress1,26. Our results demonstrate that standardized effect size and replicability can be increased by enriched sampling of

participants with small and large values of the covariate of interest. This is well known in linear models in which the standardized effect size is explicitly a function of the standard

deviation of the covariate23. We showed that designing a study to have a larger covariate standard deviation increases standardized effect sizes by a median factor of 1.4, even when there is

non-linearity in the association, such as with age and GMV (Supplementary Fig. 1). When the association is very non-monotonic — as in the case of a U-shape relationship between covariate

and outcome — sampling the tails more heavily could decrease replicability and diminish our ability to detect non-linearities in the centre of the study population. In such a case, sampling

to obtain a uniform distribution of the covariate balances power across the range of the covariate and can increase replicability relative to random sampling when the covariate has a normal

distribution in the population. Increasing between-subject variability is beneficial in more than 72% of the association pairs that we studied, despite the presence of such non-linearities

(Extended Data Fig. 3). Because standardized effect sizes are dependent on study design, careful design choices can simultaneously increase standardized effect sizes and study replicability.

Two-phase, extreme group and outcome-dependent sampling designs can inform which participants should be selected for imaging from a larger sample to increase the efficiency and standardized

effect sizes of brain–behaviour associations27,28,29,30,31,32,33. For example, given the high degree of accessibility of cognitive and behavioural testing (for example, to be performed

virtually or electronically), individuals scoring at the extremes on a testing scale or battery (‘phase I’) could be prioritized for subsequent brain scanning (‘phase II’). When there are

multiple covariates of interest, multivariate two-phase designs can be used to increase standardized effect sizes and replicability34. Multivariate designs are also needed to stratify

sampling to avoid confounding by other sociodemographic variables. Together, the use of optimal designs can increase both standardized effect sizes and replicability relative to a design

that uses random sampling31. If desired, weighted regression (such as inverse probability weighting) can be combined with optimized designs to estimate a standardized effect size that is

consistent with the standardized effect size if the study had been conducted in the full population34,35,36. Choosing highly reliable psychometric measurements or interventions (for example,

medications or neuromodulation within a clinical trial)37,38,39 may also be effective for increasing replicability. The decision to pursue an optimized design will depend on other practical

factors, such as the cost and complexity of acquiring other (non-imaging) measures of interest and the specific translational goals of the research. LONGITUDINAL DESIGN CONSIDERATIONS In

the meta-analysis, longitudinal studies of the total GMV–age associations have, on average, more than 380% larger standardized effect sizes than cross-sectional studies. However, in

subsequent analyses, we noticed that the benefit of conducting a longitudinal design is highly dependent on both the between-subject and the within-subject effects. When the between-subject

and the within-subject effects are equal and the within-subject brain measurement error is low, longitudinal studies offer larger standardized effect sizes than cross-sectional studies40

(section 5.1 in Supplementary Information). This combination of equal between-subject and within-subject effects and low within-subject measurement error is the reason that there is a

benefit of longitudinal design in the ADNI for the total GMV–age association (Supplementary Fig. 4). Comparing efficiency per measurement supports the approach of collecting two measurements

per participant in this scenario (section 5.1 in Supplementary Information). Longitudinal models offer the unique ability to separately estimate between-subject and within-subject effects.

When the between-subject and within-subject effects differ but we still fit them with a single effect, we mistakenly assume they are equal, and the interpretation of that coefficient becomes

complicated: the effect becomes a weighted average of the between-subject and within-subject effects whose weights are determined by the study design features (section 5 in Supplementary

Information). The apparent lack of benefit of longitudinal designs in the ABCD on the study of GMV associations is because within-subject changes in the non-brain measures are not associated

with within-subject changes in the GMV (Fig. 5e and Supplementary Table 13). The smaller standardized effect sizes that we found in longitudinal analyses are due to the contribution from

the smaller within-subject effect to the weighted average of the between-subject and within-subject effects (equation (14) in section 5 in Supplementary Information). Fitting the

between-subject and within-subject effects separately prevents averaging the two effects (section 5.2 in Supplementary Information). These two effects are often not directly comparable with

the effect obtained from a cross-sectional model because they have different interpretations41,42,43,44,45 (section 5.2 in Supplementary Information). Using sampling strategies to increase

between-subject and within-subject variability of the covariate will increase the standardized effect sizes for between-subject and within-subject associations, respectively (Extended Data

Fig. 5). DESIGN AND ANALYSIS RECOMMENDATIONS Although it is difficult to provide universal recommendations for study design and analysis, the present study provides general guidelines for

designing and analysing BWAS for optimal standardized effect sizes and replicability based on both empirical and theoretical results (Extended Data Figs. 7 and 8). Although the decision for

a particular design or analysis strategy may depend on unknown features of the brain and non-brain measures and their associations, these characteristics can be evaluated in pilot data or

the analysis dataset (Supplementary Fig. 4 and section 5.2 in Supplementary Information). One general principle that increases standardized effect sizes for most associations is to increase

the covariate standard deviation (for example, through two-phase, extreme group and outcome-dependent sampling), which is practically applicable to a wide range of BWAS contexts.

Longitudinal designs can be helpful and optimal even when the between-subject and within-subject effects differ, if modelled correctly. Moreover, longitudinal BWAS enable us to study

between-subject and within-subject effects separately, and they should be used when the two effects are hypothesized to be different. Although striving for large sample sizes remains

important when designing a study, our findings emphasize the importance of considering other design features to improve standardized effect sizes and replicability of BWAS. METHODS LBCC

DATASET AND PROCESSING The original LBCC dataset included 123,984 MRI scans from 101,457 human participants across more than 100 studies (which include multiple publicly available

datasets46,47,48,49,50,51,52,53,54,55,56) and was described in previous work4 (see Supplementary Information and supplementary table S1 from ref. 4). We filtered to the subset of cognitively

normal participants whose data were processed using FreeSurfer (v6.1). Studies were curated for the analysis by excluding duplicated observations and studies with fewer than 4 unique age

points, sample size less than 20 and/or only participants of one sex. If there were fewer than three participants having longitudinal observations, only the baseline observations were

included and the study was considered cross-sectional. If a participant had changing demographic information during the longitudinal follow-up (for example, changing biological sex), only

the most recent observation was included. We updated the LBCC dataset with the ABCD release 5, resulting in a final dataset that includes 77,695 MRI scans from 60,900 cognitively normal

participants with available total GMV, sGMV and GMV measures across 63 studies (Supplementary Table 1). In this dataset, 74,148 MRI scans from 57,538 participants across 43 studies have

complete-case regional brain measures (regional GMV, regional surface area and regional cortical thickness, based on Desikan–Killiany parcellation20; Supplementary Table 2). The global brain

measure mean cortical thickness was derived using the regional brain measures (see below). STRUCTURAL BRAIN MEASURES Details of data processing have been described in our previous work4. In

brief, total GMV, sGMV and WMV were estimated from T1-weighted and T2-weighted (when available) MRIs using the ‘aseg’ output from FreeSurfer (v6.0.1). All three cerebrum tissue volumes were

extracted from the aseg.stats files output by the recon-all process: ‘Total cortical gray matter volume’ for GMV; ‘Total cerebral white matter volume’ for WMV; and ‘Subcortical gray matter

volume’ for sGMV (inclusive of the thalamus, caudate nucleus, putamen, pallidum, hippocampus, amygdala and nucleus accumbens area; https://freesurfer.net/fswiki/SubcorticalSegmentation).

Regional GMV and cortical thickness across 68 regions (34 per hemisphere, based on Desikan–Killiany parcellation20) were obtained from the aparc.stats files output by the recon-all process.

Mean cortical thickness across the whole brain is the weighted average of the regional cortical thickness weighted by the corresponding regional surface areas. PREPROCESSING SPECIFIC TO ABCD

FUNCTIONAL CONNECTIVITY MEASURES Longitudinal functional connectivity measures were obtained from the ABCD-BIDS community collection, which houses a community-shared and continually updated

ABCD neuroimaging dataset available under Brain Imaging Data Structure (BIDS) standards. The data used in these analyses were processed using the abcd-hcp-pipeline (v0.1.3), an updated

version of The Human Connectome Project MRI pipeline57. In brief, resting-state functional MRI time series were demeaned and detrended, and a generalized linear model was used to regress out

mean white matter, cerebrospinal fluid and global signal, as well as motion variables and then band-pass filtered. High-motion frames (filtered frame displacement > 0.2 mm) were censored

during the demeaning and detrending. After preprocessing, the time series were parcellated using the 352 regions of the Gordon atlas (including 19 subcortical structures) and pairwise

Pearson correlations were computed among the regions. Functional connectivity measures were estimated from resting-state fMRI time series using a minimum of 5 min of data. After Fisher’s

_z_-transformation, the connectivities were averaged across the 24 canonical functional networks25, forming 276 inter-network connectivities and 24 intra-network connectivities. COGNITIVE

AND OTHER COVARIATES The ABCD dataset is a large-scale repository aiming to track the brain and psychological development of over 10,000 children 9–16 years of age by measuring hundreds of

variables, including demographic, physical, cognitive and mental health variables58. We used release 5 of the ABCD study to examine the effect of the sampling schemes on other types of

covariates including cognition (fully corrected _T_-scores of the individual subscales and total composite scores of the NIH Toolbox24), mental health (total problem CBCL syndrome scale) and

other common demographic variables (BMI, birth weight and handedness). For each of the covariates, we evaluated the effect of the sampling schemes on their associations with the global and

regional structural brain measures and functional connectivity after controlling for non-linear age and sex (and for functional connectivity outcomes only, mean frame displacement). For the

analyses of structural brain measures, there were three non-brain covariates with fewer than 5% non-missing follow-ups at both 2-year and 4-year follow-ups (that is, the Dimensional Change

Card Sort Test, Cognition Fluid Composite and Cognition Total Composite Score; Supplementary Table 11), and only their baseline cognitive measurements were included in the analyses. For the

remaining 11 variables (that is, the Picture Vocabulary Test, Flanker Inhibitory Control and Attention Test, List Sorting Working Memory Test, Pattern Comparison Processing Speed Test,

Picture Sequence Memory Test, Oral Reading Recognition Test, Crystallized Composite, CBCL, birth weight, BMI and handedness), all of the available baseline, 2-year and 4-year follow-up

observations were used. For the analyses of the functional connectivity, only the baseline observations for the List Sorting Working Memory Test were used due to missingness (Supplementary

Table 12). The records with BMI lying outside the lower and upper 1% quantiles (that is, BMI < 13.5 or BMI > 36.9) were considered misinput and replaced with missing values. The

variable handedness was imputed using the last observation carried forwards. STATISTICAL ANALYSIS REMOVAL OF SITE EFFECTS For multisite or multistudy neuroimaging studies, it is necessary to

control for potential heterogeneity between sites to obtain unconfounded and generalizable results. Before estimating the main effects of age and sex on the global and regional brain

measures (total GMV, total WMV, total sGMV, mean cortical thickness, regional GMV and regional cortical thickness), we applied ComBat21 and LongComBat22 in cross-sectional datasets and

longitudinal datasets, respectively, to remove the potential site effects. The ComBat algorithm involves several steps including data standardization, site-effect estimation, empirical

Bayesian adjustment, removing estimated site effects and data rescaling. In the analysis of cross-sectional datasets, the models for ComBat were specified as a linear regression model

illustrated below using total GMV: $${\rm{GMV\; \sim \; ns(age,\; d.f.\; =\; 2)\; +\; sex\; +\; site}},$$ where ns denotes natural cubic splines on 2 d.f., which means that there were two

boundary knots and one interval knot placed at the median of the covariate age. Splines were used to accommodate non-linearity in the age effect. For the longitudinal datasets, the model for

LongComBat used a linear mixed effects model with participant-specific random intercepts: $${\rm{GMV\; \sim \; (1| participant)\; +\; ns(age,\; d.f.\; =\; 2)\; +\; sex\; +\; site}}.$$ When

estimating the effects of other non-brain covariates in the ABCD dataset, ComBat was used to control the site effects, respectively, for each of the cross-sectional covariates. The ComBat

models were specified as illustrated below using GMV: $${\rm{GMV\; \sim \; ns(age,\; d.f.\; =\; 2)\; +\; sex}}+x+{\rm{site,}}$$ where _x_ denotes the non-brain covariate. LongComBat was used

for each of the longitudinal covariates with a linear mixed effects model with participant-specific random intercepts only: $${\rm{GMV\; \sim \; (1| participant)\; +\; ns(age,\; d.f.\; =\;

2)\; +\; sex}}+x+{\rm{site.}}$$ When estimating the effects of other covariates on the functional connectivity (FC) in the ABCD data, we additionally controlled for the mean frame

displacement (FD) of the frames remaining after scrubbing. The longComBat models were specified as: $$\begin{array}{l}\text{FC}\, \sim \,(1| \text{participant})+\text{ns}(\text{age,

d.f.}=2)\,+\,\text{sex}\\ \,+\,\text{ns}(\text{mean}\_\text{FD, d. f.}=3+x+\text{site}.\end{array}$$ The Combat and LongComBat were implemented using the neuroCombat59 and longCombat60 R

packages. Site effects were removed before all subsequent analyses including the bootstrap analyses described below. RESI FOR ASSOCIATION STRENGTH The RESI is a recently developed

standardized effect size index that has consistent interpretation across many model types, encompassing all types of test statistics in most regression models17,18. In brief, the RESI is a

standardized effect size parameter describing the deviation of the true parameter value (or values) \(\beta \) from the reference value (or values) \({\beta }_{0}\) from the statistical null

hypothesis \({H}_{0}:\,\beta ={\beta }_{0}\), $$S=\sqrt{{(\beta -{\beta }_{0})}^{T}{{\varSigma }_{\beta }}^{-1}(\beta -{\beta }_{0})},$$ where _S_ denotes the parameter RESI, \(\beta \) and

\({\beta }_{0}\) can be vectors, _T_ denotes the transpose of a matrix, \({\varSigma }_{\beta }\) is the covariance matrix for \(\sqrt{N}\hat{\beta }\) (where \(\hat{\beta }\) is the

estimator for \(\beta \), _N_ is the number of participants; section 3 in Supplementary Information). In previous work, we defined a consistent estimator for RESI17, $$\hat{S}={\left(\max

\left\{0,\frac{{T}^{2}-m}{N}\right\}\right)}^{1/2},$$ where \({{T}}^{2}\) is the chi-squared test statistics \({T}^{2}=N{(\beta -{\beta }_{0})}^{T}{{\varSigma }_{\beta }}^{-1}(\beta -{\beta

}_{0})\) for testing the null hypothesis \({H}_{0}:\,\beta ={\beta }_{0}\), \(m\) is the number of parameters being tested (that is, the length of \(\beta \)) and \(N\) is the number of

participants. As RESI is generally applicable across different models and data types, it is also applicable to the situation where Cohen’s _d_ was defined. In this scenario, the RESI is

equal to ½ Cohen’s _d_17, so Cohen’s suggested thresholds for effect size can be adopted for RESI: small (RESI = 0.1), medium (RESI = 0.25) and large (RESI = 0.4). Because RESI is robust,

when the assumptions of Cohen’s _d_ are not satisfied, such as when the variances between the groups are not equal, RESI is still a consistent estimator, but Cohen’s _d_ is not. The

confidence intervals for RESI in our analyses were constructed using 1,000 non-parametric bootstraps18. The systematic difference in the standardized effect sizes between cross-sectional and

longitudinal studies puts extra challenges on the comparison and aggregation of standardized effect size estimates across studies with different designs. To improve the comparability of

standardized effect sizes between cross-sectional and longitudinal studies, we proposed a new effect size index: the cross-sectional RESI (CS-RESI) for longitudinal datasets. The CS-RESI for

longitudinal datasets represents the RESI in the same study population if the longitudinal study had been conducted cross-sectionally. Detailed definition, point estimator and confidence

interval construction procedure for CS-RESI can be found in section 3 in Supplementary Information. Comprehensive statistical simulation studies were also performed to demonstrate the valid

performance of the proposed estimator and confidence interval for CS-RESI (section 3.2 in Supplementary Information). With CS-RESI, we can quantify the benefit of using a longitudinal study

design in a single dataset (section 3.3 in Supplementary Information). STUDY-LEVEL MODELS After removing the site effects using ComBat or LongComBat in the multisite data, we estimated the

effects of age and sex on each of the global or regional brain measures using GEEs and linear regression models in the longitudinal datasets and cross-sectional datasets, respectively. The

mean model was specified as below after ComBat or LongComBat: $${y}_{ij} \sim {\rm{ns}}({{\rm{age}}}_{ij},{\rm{d.f.}}=2)+{{\rm{sex}}}_{i},$$ where _y__ij_ was taken to be a global brain

measure (that is, total GMV, WMV, sGMV or mean cortical thickness) or regional brain measure (that is, regional GMV or cortical thickness) at the _j_-th visit from the participant _i_ and

_j_ = 1 for cross-sectional datasets. The age effect was estimated with natural cubic splines with 2 d.f., which means that there were two boundary knots and one interval knot placed at the

median of the covariate age. For the GEEs, we used an exchangeable correlation structure as the working structure and identity linkage function. The model assumes the mean was correctly

specified, but made no assumption about the error distribution. The GEEs were fitted with the ‘geepack’ package61 in R. We used the RESI as a standardized effect size measure. RESIs and

confidence intervals were computed using the ‘RESI’ R package (v1.2.0)19. META-ANALYSIS OF THE AGE AND SEX EFFECTS The weighted linear regression model for the meta-analysis of age effects

across the studies was specified as:

$${\widehat{S}}_{{\rm{age}},k}={{\rm{design}}}_{k}+{\rm{ns}}[{\rm{mean}}{({\rm{age}})}_{k},3]+{\rm{ns}}[{\rm{s.d.}}{({\rm{age}})}_{k},3]+{\rm{ns}}[{\rm{skew}}{({\rm{age}})}_{k},3]+{{\epsilon

}}_{k},$$ where \({\widehat{S}}_{{\rm{age}},k}\) denotes the estimated RESI for study _k_, and the weights were the inverse of the standard error of each RESI estimate. The sample mean,

standard deviation (s.d.) and skewness of the age were included as non-linear terms estimated using natural splines with 3 d.f. (that is, two boundary knots plus two interval knots at the

33rd and 66th percentiles of the covariates), and a binary variable indicating the design type (cross-sectional or longitudinal) was also included. The weighted linear regression model for

the meta-analysis of sex effects across the studies was specified as

$${\widehat{S}}_{{\rm{sex}},k}={{\rm{design}}}_{k}+{\rm{ns}}[{\rm{mean}}{({\rm{age}})}_{k},3]+{\rm{ns}}[{\rm{s.d.}}{({\rm{age}})}_{k},3]+{\rm{ns}}[{\rm{pr}}{({\rm{male}})}_{k},3]+{{\epsilon

}}_{k},$$ where \({\widehat{S}}_{{\rm{sex}},k}\) denotes the estimated RESI of sex for study _k_, and the weights were the inverse of the standard error of each RESI estimate. The sample

mean, standard deviation of the age covariate and the proportion of males in each study were included as non-linear terms estimated using natural splines with 3 d.f., and a binary variable

indicating the design type (cross-sectional or longitudinal) was also included. These meta-analyses were performed for each of the global and regional brain measures. Inferences were

performed using robust standard errors62. In the partial regression plots, the expected standardized effect sizes for the age effects were estimated from the meta-analysis model after fixing

mean age at 45 years, standard deviation of age at 7 years and/or skewness at 0; the expected standardized effect sizes for the sex effects were estimated from the meta-analysis model after

fixing mean age at 45 years, standard deviation of age at 7 years and/or proportion of males at 0.5. SAMPLING SCHEMES FOR AGE IN THE UKB AND ADNI We used bootstrapping to evaluate the

effect of different sampling schemes with different target sample covariate distributions on the standardized effect sizes and replicability in the cross-sectional UKB and longitudinal ADNI

datasets. For a given sample size and sampling schemes, 1,000 bootstrap replicates were conducted. The standardized effect size was estimated as the mean standardized effect size (that is,

RESI) across the bootstrap replicates. The 95% confidence interval for the standardized effect size was estimated using the lower and upper 2.5% quantiles across the 1,000 estimates of the

standardized effect size in the bootstrap replicates. Power was calculated as the proportion of bootstrap replicates producing _P_ values less than or equal to 5% for those associations that

were significant at 0.05 in the full sample. In the UKB, only one region was not significant for age in each of GMV and cortical thickness, and in the ADNI, only one and four regions were

not significant for age in GMV and cortical thickness, respectively. Replicability in previous work has been defined as having a significant _P_ value and the same sign for the regression

coefficient. Because we were fitting non-linear effects, we defined replicability as the probability that two independent studies have significant _P_ values; this is equivalent to the

definition of power squared. The 95% confidence intervals for replicability were derived using Wilson’s method63. In the UKB dataset, to modify the (between-subject) variability of the age

variable, we used the following three target sampling distributions (Extended Data Fig. 1a): bell shaped, where the target distribution had most of the participants distributed in the middle

age range; uniform, where the target distribution had participants equally distributed across the age range; and U shaped, where the target distribution had most of the participants

distributed closer to the range limits of the age in the study. The samples with U-shaped age distribution had the largest sample variance of age, followed by the samples with uniform age

distribution and the samples with bell-shaped age distribution. The bell-shaped and U-shaped functions were proportional to a quadratic function. To sample according to these distributions,

each record was first inversely weighted by the frequency of the records with age falling in the range of ±0.5 years of the age for that record to achieve the uniform sampling distribution.

Each record was then rescaled to derive the weights for bell-shaped and U-shaped sampling distributions. The records with age < 50 or age > 78 years were winsorized at 50 or 78 years

when assigning weights, respectively, to limit the effects of outliers on the weight assignment, but the actual age values were used when analysing each bootstrapped data. In each bootstrap

from the ADNI dataset, each participant was sampled to have two records. We modified the between-subject and within-subject variability of age, respectively, by making the ‘baseline age’

follow one of the three target sampling distributions used for the UKB dataset and the ‘age change’ independently follow one of three new distributions: decreasing, uniform and increasing

(Extended Data Fig. 1b,c). The increasing and decreasing functions were proportional to an exponential function. The samples with increasing distribution of age change had the largest

within-subject variability of age, followed by the samples with the uniform distribution of age change and the samples with decreasing distribution of age change. To modify the baseline age

and the age change from baseline independently, we first created all combinations of the baseline record and one follow-up from each participant, and derived the baseline age and age change

for each combination. The ‘bivariate’ frequency of each combination was obtained as the number of combinations with values of baseline age and age change falling in the range of ±0.5 years

of the values of baseline age and age change for this combination. Then, each combination was inversely weighted by its bivariate frequency to target a uniform bivariate distribution of

baseline age and age change. The weight for each combination was then rescaled to make the baseline age and age change follow different sampling distributions independently. The combinations

with baseline age < 65 or age > 85 years were winsorized at 65 or 85 years, and the combinations with age change greater than 5 years were winsorized at 5 years when assigning weights

to limit the effects of outliers on the weight assignment, but the actual ages were used when analysing each bootstrapped data. The sampling methods could be easily extended to the scenario

in which each participant had three records (and more than three) in the bootstrap data by making combinations of the baseline and two follow-ups. Each combination was inversely weighted to

achieve uniform baseline age and age change distributions, respectively, by the ‘trivariate’ frequency of the combinations with baseline age and the two age changes from baseline for the

two follow-ups falling into the range of ±0.5 years of the corresponding values for this combination. As we only investigated the effect of modifying the number of measurements per

participant under uniform between-subject and within-subject sampling schemes (Fig. 3c,d), we did not need to consider rescaling the weights here to achieve other sampling distributions but

they could be done similarly. For the scenario in which each participant only had one measurement (Fig. 3c,d), the standardized effect sizes and replicability were estimated only using the

baseline measurements. All site effects were removed using ComBat or LongComBat before performing the bootstrap analysis. SAMPLING SCHEMES FOR OTHER NON-BRAIN COVARIATES IN THE ABCD We used

bootstrapping to study how different sampling strategies affect the RESI in the ABCD dataset. Each participant in the bootstrap data had two measurements. We applied the same weight

assignment method described above for the ADNI dataset to modify the between-subject and within-subject variability of a covariate. We made the baseline covariate and the change in covariate

follow bell-shaped and/or U-shaped distributions, to let the sample have larger or smaller between-subject and/or within-subject variability of the covariate, respectively. The baseline

covariate and change of covariate were winsorized at the upper and lower 5% quantiles to limit the effect of outliers on sampling. For each cognitive variable, only the participants with

non-missing baseline measurements and at least one non-missing follow-up were included. Generalized linear models and GEEs were fitted to estimate the effect of each non-brain covariate on

the structural brain measures after controlling for age and sex, $${\rm{GMV}} \sim {\rm{ns}}({\rm{age,\; d.f.}}=2)+{\rm{sex}}+x,$$ where _x_ denotes one of the non-brain covariates. For the

GEEs, we used an exchangeable correlation structure as the working structure and identity linkage function. Only the between-subject sampling schemes were applied for the non-brain

covariates that were stable over time (for example, birth weight and handedness). In other words, the participants were sampled based on their baseline covariate values, and then a follow-up

was selected randomly for each participant. The sampling schemes to increase the between-subject variability in the covariate handedness, which was a binary variable (right-handed or not),

was specified differently. The expected proportion of right-handed participants in the bootstrap samples was 50% under the sampling scheme with larger between-subject variability and 10%

under the sampling scheme with smaller between-subject variability. For given between-subject and/or within-subject sampling schemes, we obtained 1,000 bootstrap replicates. The standardized

effect size was estimated as the mean standardized effect size across the bootstrap replicates. The 95% confidence intervals for standardized effect size were estimated using the lower and

upper 2.5% quantiles across the 1,000 estimates of the standardized effect size in the bootstrap replicates. The sample sizes needed for 80% replicability were estimated based on the (mean)

estimated standardized effect size and _F_-distribution (see below). ANALYSIS OF FUNCTIONAL CONNECTIVITY IN THE ABCD In a subset of the ABCD in which we have preprocessed longitudinal

functional connectivity data at two time points (baseline and 2-year follow-up), we only restricted our analysis to the participants with non-missing measurements at both of the two time

points. In the GEEs used to estimate the effects of non-brain covariates on functional connectivity, the mean model was specified as below after LongComBat: $${y}_{ij} \sim

{\rm{ns}}({{\rm{age}}}_{ij},{\rm{d.f.}}=2)+{{\rm{sex}}}_{i}+{\rm{ns}}({\rm{mean}}\_{\rm{FD}},\mathrm{d.f.}=3)+x,$$ where _y__ij_ was taken to be a functional connectivity outcome, and _x_

denotes a non-brain covariate. The mean frame-wise displacement (mean_FD) was also included as a covariate with natural cubic splines with 3 d.f. We used an exchangeable correlation

structure as the working structure and identity linkage function in the GEEs. The frame count of each scan was used as the weights. When evaluating the effect of different sampling schemes

on the standardized effect sizes, we obtained 1,000 bootstrap replicates for given between-subject and/or within-subject sampling schemes. The standardized effect size was estimated as the

mean standardized effect size across the bootstrap replicates. Confidence intervals were computed as described above. The sample sizes needed for 80% replicability were estimated based on

the (mean) estimated standardized effect sizes and _F_-distribution (see below). SAMPLE SIZE CALCULATION FOR A TARGET POWER OR REPLICABILITY WITH A GIVEN STANDARDIZED EFFECT SIZE After

estimating the standardized effect size for an association, the calculation of the corresponding sample size _N_ needed for detecting this association with _γ_ × 100% power at significance

level of _α_ was based on an _F_-distribution. d.f. denotes the total degree of freedom of the analysis model, \(F(z;\lambda )\) denotes the cumulative density function for a random variable

_z_, which follows the (non-central) _F_-distribution with degrees of freedom being 1 and _N_ − d.f. and non-centrality parameter \(\lambda \). The corresponding sample size _N_ is:

$$N=\{N:F({F}^{-1}(1-\alpha )\,;\lambda =N{\hat{S}}^{2})=\gamma \},$$ where \(\hat{S}\) is the estimated RESI for the standardized effect size. Power curves for the RESI are given in figure

3 of Vandekar et al.17. Replicability was defined as the probability that two independent studies have significant _P_ values, which is equivalent to power squared. ESTIMATION OF THE

BETWEEN-SUBJECT AND WITHIN-SUBJECT EFFECTS For the non-brain covariates that were analysed longitudinally in the ABCD dataset, GEEs with exchangeable correlation structures were fitted to

estimate their cross-sectional and longitudinal effects on structural and functional brain measures after controlling for age and sex, respectively. The mean model was specified as

illustrated with GMV: $${\rm{GMV\; \sim \; ns(age,\; d.f.\; =\; 2)\; +\; sex}}+X\_{\rm{bl}}+X\_{\rm{change,}}$$ where _X__bl denotes the participant-specific baseline covariate values, and

the _X__change denotes the difference of the covariate value at each visit to the participant-specific baseline covariate value (see section 5.2 in Supplementary Information). The

participants without baseline measures were not included in the modelling. The model coefficients for the terms _X__bl and _X__change represent the between-subject and within-subject effects

of this non-brain covariate on total GMV, respectively. For the functional connectivity data, the same covariates and weighting were used as described above. Using the first time point as

the between-subject term was a special case that ensured that comparing the parameter using the baseline cross-sectional model was equal to the parameter for the between-subject effect in

the longitudinal model. In this model, the between-subject variance was defined as the variance of the baseline measurement, and the within-subject variance was the mean square of

_X__change. This model specification ensured that the sampling schemes independently affected the between-subject and within-subject variances separately (equation (16) in section 5.2 in

Supplementary Information). REPORTING SUMMARY Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article. DATA AVAILABILITY

Participant-level data from many datasets are available according to study-level data access rules. Study-level model parameters are available at https://github.com/KaidiK/RESI_BWAS. We

acknowledge the usage of several openly shared MRI datasets, which are available at the respective consortia websites and are subject to the sharing policies of each consortium: OpenNeuro

(https://openneuro.org/), UKB (https://www.ukbiobank.ac.uk/), ABCD (https://abcdstudy.org/), the Laboratory of NeuroImaging (https://loni.usc.edu/), data made available through the Open

Science Framework (https://osf.io/), the Human Connectome Project (http://www.humanconnectomeproject.org/) and the OpenPain project (https://www.openpain.org). The ABCD data repository grows

and changes over time. The ABCD data used in this paper are from the NIMH Data Archive (https://doi.org/10.15154/1503209) and the ABCD BIDS Community Collection (ABCC;

https://collection3165.readthedocs.io). Data used in this article were provided by the Brain Consortium for Reliability, Reproducibility and Replicability (3R-BRAIN)

(https://github.com/zuoxinian/3R-BRAIN). Data used in the preparation of this article were obtained from the Australian Imaging Biomarkers and Lifestyle (AIBL) flagship study of ageing

funded by the Commonwealth Scientific and Industrial Research Organisation (CSIRO), which was made available at the ADNI database

(https://adni.loni.usc.edu/aibl-australian-imaging-biomarkers-and-lifestyle-study-of-ageing-18-month-data-now-released/). The AIBL researchers contributed data but did not participate in

analysis or writing of this report. AIBL researchers are listed at https://www.aibl.csiro.au. Data used in preparation of this article were obtained from the ADNI database

(https://adni.loni.usc.edu/). The investigators within the ADNI contributed to the design and implementation of the ADNI and/or provided data but did not participate in analysis or writing

of this report. A complete listing of ADNI investigators can be found at https://adni.loni.usc.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf. More information on the

ARWIBO Consortium can be found at https://www.arwibo.it/. More information on CALM team members can be found at https://calm.mrc-cbu.cam.ac.uk/team/ and in the Supplementary Information.

Data used in this article were obtained from the developmental component ‘Growing Up in China’ of the Chinese Color Nest Project (http://deepneuro.bnu.edu.cn/?p=163). Data used in the

preparation of this article were obtained from the IConsortium on Vulnerability to Externalizing Disorders and Addictions (c-VEDA), India (https://cveda-project.org/). Data used in the

preparation of this article were obtained from the Harvard Aging Brain Study (HABS P01AG036694; https://habs.mgh.harvard.edu). Data used in the preparation of this article were obtained from

the IMAGEN Consortium (https://imagen-europe.com/). The POND Network (https://pond-network.ca/) is a Canadian translational network in neurodevelopmental disorders, primarily funded by the

Ontario Brain Institute. The LBCC dataset used in the preparation of this article includes data obtained from the ADNI database (https://adni.loni.usc.edu). The ADNI was launched in 2003 as

a public–private partnership, led by Principal Investigator M. W. Weiner. The primary goal of the ADNI has been to test whether serial MRI, positron emission tomography, other biological

markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment and early Alzheimer’s disease. Its data collection and sharing

for this project were funded by the ADNI (National Institutes of Health grant U01 AG024904) and Department of Defense ADNI (Department of Defense award number W81XWH-12-2-0012). ADNI is

funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through contributions from the following: AbbVie, Alzheimer’s Association;

Alzheimer’s Drug Discovery Foundation; Araclon Biotech; BioClinica; Biogen; Bristol-Myers Squibb; CereSpir; Cogstate; Eisai; Elan Pharmaceuticals; Eli Lilly and Company; EuroImmun; F.

Hoffmann-La Roche and its affiliated company Genentech; Fujirebio; GE Healthcare; IXICO; Janssen Alzheimer Immunotherapy Research & Development; Johnson & Johnson Pharmaceutical

Research & Development; Lumosity; Lundbeck; Merck & Co.; Meso Scale Diagnostics; NeuroRx Research; Neurotrack Technologies; Novartis Pharmaceuticals Corporation; Pfizer; Piramal

Imaging; Servier; Takeda Pharmaceutical Company; and Transition Therapeutics. The Canadian Institutes of Health Research are providing funds to support ADNI clinical sites in Canada. Private

sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and

Education, and the study is coordinated by the Alzheimer’s Therapeutic Research Institute at the University of Southern California. ADNI data are disseminated by the Laboratory for

NeuroImaging at the University of Southern California. CODE AVAILABILITY All code used to produce the analyses presented in this study is available at https://github.com/KaidiK/RESI_BWAS.

REFERENCES * Marek, S. et al. Reproducible brain-wide association studies require thousands of individuals. _Nature_ 603, 654–660 (2022). Article ADS CAS PubMed PubMed Central Google

Scholar * Owens, M. M. et al. Recalibrating expectations about effect size: a multi-method survey of effect sizes in the ABCD study. _PLoS ONE_ 16, e0257535 (2021). Article CAS PubMed

PubMed Central Google Scholar * Spisak, T., Bingel, U. & Wager, T. D. Multivariate BWAS can be replicable with moderate sample sizes. _Nature_ 615, E4–E7 (2023). Article ADS CAS

PubMed PubMed Central Google Scholar * Bethlehem, Ra. I. et al. Brain charts for the human lifespan. _Nature_ 604, 525–533 (2022). Article CAS PubMed PubMed Central Google Scholar *

Nosek, B. A. et al. Replicability, robustness, and reproducibility in psychological science. _Annu. Rev. Psychol._ 73, 719–748 (2022). Article PubMed Google Scholar * Patil, P., Peng, R.

D. & Leek, J. T. What should we expect when we replicate? A statistical view of replicability in psychological science. _Perspect. Psychol. Sci. J. Assoc. Psychol. Sci._ 11, 539–544

(2016). Article Google Scholar * Liu, S., Abdellaoui, A., Verweij, K. J. H. & van Wingen, G. A. Replicable brain–phenotype associations require large-scale neuroimaging data. _Nat.

Hum. Behav._ 7, 1344–1356 (2023). Article PubMed Google Scholar * Button, K. S. et al. Power failure: why small sample size undermines the reliability of neuroscience. _Nat. Rev.

Neurosci._ 14, 365–376 (2013). Article CAS PubMed Google Scholar * Szucs, D. & Ioannidis, J. P. Sample size evolution in neuroimaging research: an evaluation of highly-cited studies

(1990–2012) and of latest practices (2017–2018) in high-impact journals. _NeuroImage_ 221, 117164 (2020). Article PubMed Google Scholar * Reddan, M. C., Lindquist, M. A. & Wager, T.

D. Effect size estimation in neuroimaging. _JAMA Psychiatry_ 74, 207–208 (2017). Article PubMed Google Scholar * Vul, E. & Pashler, H. Voodoo and circularity errors. _NeuroImage_ 62,

945–948 (2012). Article PubMed Google Scholar * Vul, E., Harris, C., Winkielman, P. & Pashler, H. Puzzlingly high correlations in fMRI studies of emotion, personality, and social

cognition. _Perspect. Psychol. Sci. J. Assoc. Psychol. Sci._ 4, 274–290 (2009). Article Google Scholar * Nee, D. E. fMRI replicability depends upon sufficient individual-level data.

_Commun. Biol._ 2, 130 (2019). Article PubMed PubMed Central Google Scholar * Smith, P. L. & Little, D. R. Small is beautiful: in defense of the small-N design. _Psychon. Bull. Rev._

25, 2083–2101 (2018). Article PubMed PubMed Central Google Scholar * Klapwijk, E. T., van den Bos, W., Tamnes, C. K., Raschle, N. M. & Mills, K. L. Opportunities for increased

reproducibility and replicability of developmental neuroimaging. _Dev. Cogn. Neurosci._ 47, 100902 (2020). Article PubMed PubMed Central Google Scholar * Lawless, J. F., Kalbfleisch, J.

D. & Wild, C. J. Semiparametric methods for response-selective and missing data problems in regression. _J. R. Stat. Soc. Ser. B Stat. Methodol._ 61, 413–438 (1999). Article MathSciNet

Google Scholar * Vandekar, S., Tao, R. & Blume, J. A robust effect size index. _Psychometrika_ 85, 232–246 (2020). Article MathSciNet PubMed PubMed Central Google Scholar * Kang,

K. et al. Accurate confidence and Bayesian interval estimation for non-centrality parameters and effect size indices. _Psychometrika_ https://doi.org/10.1007/s11336-022-09899-x (2023). *

Jones, M., Kang, K. & Vandekar, S. RESI: an R package for robust effect sizes. Preprint at https://arxiv.org/abs/2302.12345 (2023). * Desikan, R. S. et al. An automated labeling system

for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. _NeuroImage_ 31, 968–980 (2006). Article PubMed Google Scholar * Johnson, W. E., Li, C. &

Rabinovic, A. Adjusting batch effects in microarray expression data using empirical Bayes methods. _Biostatistics_ 8, 118–127 (2007). Article PubMed Google Scholar * Beer, J. C. et al.

Longitudinal ComBat: a method for harmonizing longitudinal multi-scanner imaging data. _NeuroImage_ 220, 117129 (2020). Article PubMed Google Scholar * Boos, D. D. & Stefanski, L. A.

_Essential Statistical Inference: Theory and Methods_ (Springer-Verlag, 2013). * Carlozzi, N. E. et al. Construct validity of the NIH Toolbox cognition battery in individuals with stroke.

_Rehabil. Psychol._ 62, 443–454 (2017). Article PubMed PubMed Central Google Scholar * Gordon, E. M. et al. Generation and evaluation of a cortical area parcellation from resting-state

correlations. _Cereb. Cortex_ 26, 288–303 (2016). Article PubMed Google Scholar * Noble, S., Mejia, A. F., Zalesky, A. & Scheinost, D. Improving power in functional magnetic resonance

imaging by moving beyond cluster-level inference. _Proc. Natl Acad. Sci. USA_ 119, e2203020119 (2022). Article CAS PubMed PubMed Central Google Scholar * Tao, R., Zeng, D. & Lin,

D.-Y. Optimal designs of two-phase studies. _J. Am. Stat. Assoc._ 115, 1946–1959 (2020). Article MathSciNet CAS PubMed Google Scholar * Schildcrout, J. S., Garbett, S. P. &

Heagerty, P. J. Outcome vector dependent sampling with longitudinal continuous response data: stratified sampling based on summary statistics. _Biometrics_ 69, 405–416 (2013). Article

MathSciNet PubMed Google Scholar * Tao, R., Zeng, D. & Lin, D.-Y. Efficient semiparametric inference under two-phase sampling, with applications to genetic association studies. _J.

Am. Stat. Assoc._ 112, 1468–1476 (2017). Article MathSciNet CAS PubMed PubMed Central Google Scholar * Fisher, J. E., Guha, A., Heller, W. & Miller, G. A. Extreme-groups designs in

studies of dimensional phenomena: advantages, caveats, and recommendations. _J. Abnorm. Psychol._ 129, 14–20 (2020). Article PubMed Google Scholar * Preacher, K. J., Rucker, D. D.,

MacCallum, R. C. & Nicewander, W. A. Use of the extreme groups approach: a critical reexamination and new recommendations. _Psychol. Methods_ 10, 178–192 (2005). Article PubMed Google

Scholar * Amanat, S., Requena, T. & Lopez-Escamez, J. A. A systematic review of extreme phenotype strategies to search for rare variants in genetic studies of complex disorders. _Genes_

11, 987 (2020). Article CAS PubMed PubMed Central Google Scholar * Lotspeich, S. C., Amorim, G. G. C., Shaw, P. A., Tao, R. & Shepherd, B. E. Optimal multiwave validation of

secondary use data with outcome and exposure misclassification. _Can. J. Stat_. https://doi.org/10.1002/cjs.11772 (2023). * Tao, R. et al. Analysis of sequence data under multivariate

trait-dependent sampling. _J. Am. Stat. Assoc._ 110, 560–572 (2015). Article MathSciNet CAS PubMed PubMed Central Google Scholar * Lin, H. et al. Strategies to design and analyze

targeted sequencing data. _Circ. Cardiovasc. Genet._ 7, 335–343 (2014). Article PubMed PubMed Central Google Scholar * Tao, R., Lotspeich, S. C., Amorim, G., Shaw, P. A. & Shepherd,

B. E. Efficient semiparametric inference for two-phase studies with outcome and covariate measurement errors. _Stat. Med._ 40, 725–738 (2021). Article MathSciNet PubMed Google Scholar *

Nikolaidis, A. et al. Suboptimal phenotypic reliability impedes reproducible human neuroscience. Preprint at _bioRxiv_ https://doi.org/10.1101/2022.07.22.501193 (2022). * Xu, T. et al. ReX:

an integrative tool for quantifying and optimizing measurement reliability for the study of individual differences. _Nat. Methods_ 20, 1025–1028 (2023). Article CAS PubMed Google Scholar

* Gell, M. et al. The burden of reliability: how measurement noise limits brain–behaviour predictions. Preprint at _bioRxiv_ https://doi.org/10.1101/2023.02.09.527898 (2024). * Diggle P.,

Heagerty P., Liang K.-Y. & Zeger S. _Analysis of Longitudinal Data_ 2nd edn (Oxford Univ. Press, 2013). * Pepe, M. S. & Anderson, G. L. A cautionary note on inference for marginal

regression models with longitudinal data and general correlated response data. _Commun. Stat. Simul. Comput_. https://doi.org/10.1080/03610919408813210 (1994). * Begg, M. D. & Parides,

M. K. Separation of individual-level and cluster-level covariate effects in regression analysis of correlated data. _Stat. Med._ 22, 2591–2602 (2003). Article PubMed Google Scholar *

Curran, P. J. & Bauer, D. J. The disaggregation of within-person and between-person effects in longitudinal models of change. _Annu. Rev. Psychol._ 62, 583–619 (2011). Article PubMed

PubMed Central Google Scholar * Di Biase, M. A. et al. Mapping human brain charts cross-sectionally and longitudinally. _Proc. Natl Acad. Sci. USA_ 120, e2216798120 (2023). Article CAS

PubMed PubMed Central Google Scholar * Guillaume, B., Hua, X., Thompson, P. M., Waldorp, L. & Nichols, T. E. Fast and accurate modelling of longitudinal and repeated measures

neuroimaging data. _NeuroImage_ 94, 287–302 (2014). Article PubMed Google Scholar * The ADHD-200 Consortium. The ADHD-200 Consortium: a model to advance the translational potential of

neuroimaging in clinical neuroscience. _Front. Syst. Neurosci._ 6, 62 (2012). PubMed Central Google Scholar * Di Martino, A. et al. The autism brain imaging data exchange: towards a

large-scale evaluation of the intrinsic brain architecture in autism. _Mol. Psychiatry_ 19, 659–667 (2014). Article PubMed Google Scholar * Snoek, L. et al. AOMIC-PIOP1. _OpenNeuro_

https://doi.org/10.18112/openneuro.ds002785.v2.0.0 (2020). * Snoek, L. et al. AOMIC-PIOP2. _OpenNeuro_ https://doi.org/10.18112/openneuro.ds002790.v2.0.0 (2020). * Snoek, L. et al.

AOMIC-ID1000. _OpenNeuro_ https://doi.org/10.18112/openneuro.ds003097.v1.2.1 (2021). * Bilder, R. et al. UCLA Consortium for Neuropsychiatric Phenomics LA5c study. _OpenNeuro_

https://doi.org/10.18112/openneuro.ds000030.v1.0.0 (2020). * Nastase, S. A. et al. Narratives. _OpenNeuro_ https://doi.org/10.18112/openneuro.ds002345.v1.1.4 (2020). * Alexander, L. M. et

al. An open resource for transdiagnostic research in pediatric mental health and learning disorders. _Sci. Data_ 4, 170181 (2017). Article PubMed PubMed Central Google Scholar *

Richardson, H., Lisandrelli, G., Riobueno-Naylor, A. & Saxe, R. Development of the social brain from age three to twelve years. _Nat. Commun._ 9, 1027 (2018). Article ADS PubMed

PubMed Central Google Scholar * Kuklisova-Murgasova, M. et al. A dynamic 4D probabilistic atlas of the developing brain. _NeuroImage_ 54, 2750–2763 (2011). Article PubMed Google Scholar

* Reynolds, J. E., Long, X., Paniukov, D., Bagshawe, M. & Lebel, C. Calgary preschool magnetic resonance imaging (MRI) dataset. _Data Brief._ 29, 105224 (2020). Article PubMed PubMed

Central Google Scholar * Feczko, E. et al. Adolescent Brain Cognitive Development (ABCD) community MRI collection and utilities. Preprint at _bioRxiv_

https://doi.org/10.1101/2021.07.09.451638 (2021). * Casey, B. J. et al. The Adolescent Brain Cognitive Development (ABCD) study: imaging acquisition across 21 sites. _Dev. Cogn. Neurosci._

32, 43–54 (2018). Article CAS PubMed PubMed Central Google Scholar * Fortin J.-P. neuroCombat: harmonization of multi-site imaging data with ComBat. R package version 1.0.13 (2023). *

Beer, J. longCombat: longitudinal ComBat for harmonizing multi-batch longitudinal data. R package version 0.0.0.90000; https://github.com/jcbeer/longCombat (2020). * Højsgaard, S., Halekoh,

U., Yan, J. & Ekstrøm, C. T. geepack: Generalized estimating equation package; https://cran.r-project.org/web/packages/geepack/index.html (2022). * Long, J. S. & Ervin, L. H. Using

heteroscedasticity consistent standard errors in the linear regression model. _Am. Stat._ 54, 217–224 (2000). Article Google Scholar * Agresti, A. & Coull, B. A. Approximate is better

than ‘exact’ for interval estimation of binomial proportions. _Am. Stat._ 52, 119–126 (1998). MathSciNet Google Scholar Download references ACKNOWLEDGEMENTS S.V. was supported by

R01MH123563 from the National Institute of Mental Health (NIMH). A.A.-B. and J.Seidlitz were partially supported by R01MH132934 and R01MH133843 from the NIMH. B.T.-C. was supported by

K23DA057486 from National Institute of Drug Abuse (NIDA). T.D.S. was supported by R01MH120482, R01MH112847, R01MH113550 and R37MH125829 from the NIMH, R01EB022573 from the National Institute

of Biomedical Imaging and Bioengineering (NIBIB), the AE Foundation and the Penn-CHOP Lifespan Brain Institute. B.L. was supported by R00MH127293 from the NIMH. D.F. was supported by

U01DA041148R and U24DA055330 from the NIDA, R37MH125829, R01MH096773 and R01MH115357 from the NIMH, the Masonic Institute for the Developing Brain, and the Lynne and Andrew Redleaf

Foundation. Data processing done at the University of Cambridge is supported by the NIHR Cambridge Biomedical Research Centre (BRC-1215-20014) and NIHR Applied Research Collaboration East of

England. Data used in the preparation of this article include data obtained from the ADNI database (https://adni.loni.usc.edu). As such, the investigators in the ADNI contributed to the

design and implementation of the ADNI and/or provided data, but did not participate in the analysis or writing of this report. A complete list of the ADNI investigators is available

(https://adni.loni.usc.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf). Data were used from the following consortia: 3R-BRAIN, AIBL, Alzheimer’s Disease Neuroimaging

Initiative (ADNI), Alzheimer’s Disease Repository Without Borders Investigators, CALM Team, CCNP, COBRE, cVEDA, Harvard Aging Brain Study, IMAGEN, POND, and The PREVENT-AD Research Group;

and lists of members and their affiliations appears in the Supplementary Information. Any views expressed are those of the authors and not necessarily those of the funders, IHU-JU2, the NIHR

or the Department of Health and Social Care. AUTHOR INFORMATION Author notes * These authors contributed equally: Aaron Alexander-Bloch, Simon Vandekar * A full list of members and their