DiLFM: an artifact-suppressed and noise-robust light-field microscopy through dictionary learning


ABSTRACT Light field microscopy (LFM) has been widely used for recording 3D biological dynamics at camera frame rate. However, LFM suffers from artifact contamination because the reconstruction problem is ill-posed when solved via naïve Richardson–Lucy (RL) deconvolution. Moreover, the performance of LFM drops significantly in low-light conditions due to the absence of sample priors.

In this paper, we thoroughly analyze different kinds of artifacts and present a new LFM technique termed dictionary LFM (DiLFM) that substantially suppresses various kinds of reconstruction

artifacts and improves the noise robustness with an over-complete dictionary. We demonstrate artifact-suppressed reconstructions in scattering samples such as _Drosophila_ embryos and

brains. Furthermore, we show our DiLFM can achieve robust blood cell counting in noisy conditions by imaging blood cell dynamics at 100 Hz and unveil more neurons in whole-brain calcium

recording of zebrafish with low illumination power in vivo. INTRODUCTION Cellular

motions and activities in vivo usually occur on a millisecond timescale and in 3D space, including voltage and calcium transients of neurons1,2, blood cell flows in beating hearts3, and membrane dynamics in embryo cells4. Observing and understanding these phenomena requires the ability to record cellular structures with high spatiotemporal resolution in 3D. Many techniques have been developed to meet this requirement, including confocal5,6 and multiphoton scanning microscopy7, selective plane illumination8, and structured illumination microscopy9. Although these

techniques can access the 3D structures in combination with depth scanning, the temporal resolution is limited by the inertia of the scanning devices or the single-plane recording rate.

Therefore, a number of advanced techniques based on multiplexing and optimized sampling strategies have been introduced, such as multiplane or multifocal imaging10, scanning temporal focusing microscopy11, and random access microscopy12. However, the heat tolerance of living animals or organs and sample density still prevent those methods from achieving high throughput with low

light doses. Light field microscopy (LFM) emerges as a popular tool in incoherent imaging of volumetric biological samples within a single shot13,14,15,16,17,18,19. This is achieved by

capturing a 4D light field on a single 2D array detector through specific optical components such as the microlens array (MLA). The 3D information of biological samples is extracted from 4D

light field measurements through multiple Richardson-Lucy (RL) reconstruction iterations20,21. The lack of a scanning device makes LFM a high-speed volumetric imaging tool for biological

systems, with various applications in live-cell imaging18, volumetric imaging of beating hearts and blood flow22, and neural recording23,24, to name a few. Although LFM has achieved great

success, current LFM implementations suffer from several disadvantages: (1) an inherent trade-off between improving reconstruction contrast and reducing ringing effects at edges; (2) severe block-wise artifacts near the native image plane (NIP)13; (3) contamination of 3D-resolved structures by depth crosstalk; and (4) rapid performance degradation in low signal-to-noise ratio (SNR) situations. These drawbacks stem from the low spatial sampling and the ill-posedness of restoring 3D information from 2D sensor images. Many methods have been proposed to mitigate some of these drawbacks. To avoid NIP artifacts, it is straightforward to set the imaging volume on one side of the NIP23, but this substantially reduces the imaging depth range. Shifting the

MLA to avoid NIP will sacrifice the depth-of-field18. Methods that reshape PSF25 or add additional views22,26 can help ease edge ringing and improve the contrast, but either require

customized optical components or complicate the system by adding scanning devices or more objectives. The additional complexity of adding more hardware also hampers the usage of LFM in

freely behaving animals for volumetric functional imaging due to space and weight limitation27. On the other hand, modifying the reconstruction algorithm to mitigate the LFM artifacts is

more convenient and flexible since adjusting the hardware is avoided. Introducing a strong blur in the reconstructed volume can reduce the NIP block-wise artifacts and depth crosstalk, but

the imaging resolution will be much worse28. The phase space reconstruction approach by Zhi et al. achieves faster convergence and reduces NIP block-wise artifacts through serially

reconstructing different angular views29 but cannot solve the contrast and ringing dilemma. All these implementations suffer from noise-induced artifacts when imaging phototoxicity-sensitive

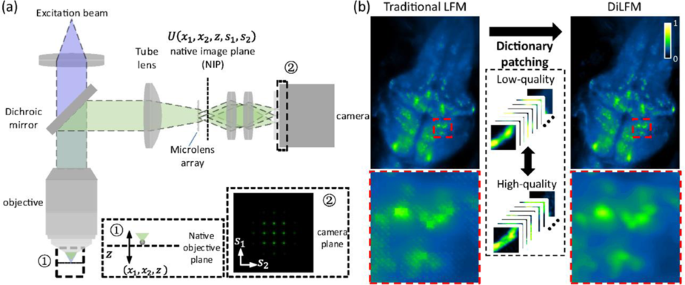

samples like mitochondria and zebrafish embryos. Here, we propose a new LFM method based on dictionary patching, termed DiLFM, to enable fast, robust, and artifact-suppressed volumetric

imaging under different noisy conditions without hardware modifications (Fig. 1). Our approach is motivated by recent results in sparse signal representation, suggesting that artifact-free

signals can be well represented using a linear combination of few elements from a redundant dictionary even under heavily noisy conditions30. The systematic artifacts due to the low sampling

rate in LFM can be compensated by dictionary priors learned from general biological samples. Our DiLFM reconstruction is a combination of a few RL iterations to provide basic but

ringing-reduced 3D volumes with a dictionary patching process to fix the reconstruction artifacts and improve the resolution and contrast (Fig. S1). We train a pair of low- and high-fidelity

dictionaries under the LFM forward model such that only matched low-fidelity snippets from RL reconstructions are updated by high-fidelity elements. Owing to the robustness of both few-run RL and dictionary patching in low-SNR conditions, our DiLFM outperforms other methods under noise contamination. We demonstrate the contrast improvement and artifact

reduction by DiLFM via multiple simulations and experiments, including the _Drosophila_ embryo and brain. We show the robustness of DiLFM in observing zebrafish blood flow at 100 Hz in

low-light conditions. We further demonstrate that our DiLFM finds about twice as many neurons in the zebrafish brain in vivo with low-power illumination. RESULTS DILFM SUBSTANTIALLY SUPPRESSES ARTIFACTS IN LFM Due to the ill-posedness of LFM reconstruction, traditional LFM usually generates several kinds of artifacts with RL deconvolution (Methods and Fig. S1). First, we

find traditional LFM cannot achieve high contrast and ringing-suppressed reconstructions at the same time. We numerically simulate a USAF-1951 resolution target at _z_ = −50 µm and find the

core of the square becomes dimmer and dimmer as more RL iterations are involved (Fig. S2a, b). The intensity cross-section of an originally sharp edge clearly shows a ringing feature, while

the peak-to-valley ratio increasing with the iteration number suggests improved contrast (Fig. S2c–e). We argue that such contrast increase is at the cost of image structure distortions,

which should be alleviated for quantitative analysis. Another well-known artifact of LFM is the block-wise features in NIP due to the insufficient spatial sampling of LFM near NIP (Fig. S3a,

c). Block artifacts simultaneously disturb structure continuity in both lateral and axial axes (Fig. S3d, e). The third kind of image artifact is the defocus artifact among different

layers. We find a sphere at _z_ = −70 µm shows a smeared ghost with high-frequency grids and blocks even at _z_ = +50 µm after LFM reconstruction, which is very different from a conventional

widefield microscope whose defocus pattern is smooth (Fig. S4a). When there are two spheres in the space, the original well-reconstructed sphere can be contaminated by grid patterns due to

the defocus of other spheres (Fig. S9). When imaging a thick biological volume with LFM, each depth layer generates a _z_-spread defocus pattern that contains high-frequency components

mixing with other depths (Figs. S4b, f–h). The resulting reconstructions will be full of grid-like patterns and have isolated unnatural high-frequency components in the Fourier domain (Fig.

S4c). To address all of the artifacts simultaneously, we propose DiLFM, which includes a few RL iterations to avoid edge ringing and an additional dictionary patching approach to suppress

other artifacts and improve the imaging contrast. The number of RL iterations in this approach is chosen to stay just below the point at which the ringing artifact appears (Fig. S2a, b,

Table S2). Our DiLFM leverages the domain similarity of different biological samples to assist high-fidelity recovery in LFM (Methods). Compared to traditional LFM, DiLFM achieves the same

contrast as RL deconvolutions with 10 iterations but with significantly reduced edge ringing (Fig. S2f). Meanwhile, DiLFM faithfully recovers the block-like feature of a round bead in both

_x–y_ and _x–z_ planes compared to traditional LFM (Fig. S3b–d). Compared to frequency-domain filtering methods, DiLFM can achieve higher contrast over different depths (Fig. S7). The

grid-like crosstalk artifacts by traditional LFM are suppressed in DiLFM and reconstructed samples become much smoother (Fig. S4d, i–k, Fig. S9b). Such artifact reduction is also confirmed

in the frequency domain since the reconstruction by DiLFM has a more natural and clearer frequency response (Fig. S4e) compared to that by traditional LFM. We further compare our DiLFM with

other emerging LFM methods28,29 through simulation. All methods apart from the RL deconvolution of traditional LFM suppress the block-like artifacts, but the anti-aliasing filter blurs the sample and the phase space method generates ringing artifacts (Fig. 3a–c). DiLFM achieves an artifact-suppressed reconstruction together with sharp edges, showing a good balance between artifact reduction and resolution maintenance. We use the structural similarity index (SSIM)31 to quantitatively assess the reconstruction quality. We find the SSIM of our method is 0.89, higher than the 0.81 of the phase space approach, the 0.69 of RL with an anti-aliasing filter, and the 0.65 of plain RL deconvolution. For objects with gradual

changes in the intensity profiles, our DiLFM also achieves superior reconstruction results compared to other methods (Fig. S8). DILFM IMPROVES FIDELITY IN BIOLOGICAL OBSERVATIONS The

artifact reduction makes LFM more reliable in observing _Drosophila_ embryos and adult _Drosophila_ brains (Fig. 2a and Fig. S5a). We observe that the block-wise artifacts are largely

reduced by DiLFM, and the embryo boundary and brain sulcus are restored to be smooth (Fig. 2d, f, Fig. S5i–k). Embryo cells at NIP that are incorrectly reconstructed into square forms by

traditional LFM are restored to be round by DiLFM (Fig. 2e, g). The frequency response of these structures is restored to be a natural form with reduced periodic artifacts (Fig. 2f and Fig.

S5k). We also observe the grid patterns from depth crosstalk are largely reduced by DiLFM (Fig. 2h, i, Fig. S5f–h). We find the peak-to-valley ratio of a brain sulcus is improved ~1.2 times

at _z_ = 10 µm by DiLFM, underlining that the reduction of artifacts in DiLFM does not sacrifice resolution, unlike previous methods. LFM is widely used in high-speed volumetric recording tasks owing to its scanning-free operation and low phototoxicity compared to scanning techniques such as confocal microscopy and two-photon microscopy. We thus demonstrate the superior performance of DiLFM in zebrafish blood flow imaging in vivo at 100 Hz in 3D (Fig. S6). We find DiLFM achieves reduced background compared to traditional LFM (Fig. S6b, c). Blood cells show reduced

depth crosstalk in DiLFM such that they can be easily tracked (Fig. S6d, e). High-speed volumetric recording enables us to analyze blood flows in zebrafish larvae by calculating time-lapse

intensity changes through a blood vessel cross-section. Such intensity fluctuations fail to predict blood cell flows in traditional LFM due to low contrast and artifacts (Fig. S6f, h). In contrast, DiLFM provides clear reconstructions and suppresses the ambiguity in blood cell counting (Fig. S6g, i, Fig. S11). DILFM ACHIEVES SIGNIFICANT NOISE ROBUSTNESS As a volumetric

imaging tool, LFM shows much lower phototoxicity compared to a confocal microscope since almost all emitted fluorescent photons contribute to the final image without waste. However, the

ill-posedness of LFM reconstruction causes severe artifacts in low photon flux conditions. The dominant source of noise in LFM is shot noise, which can be modeled by a Poisson distribution13, while readout noise, which follows a Gaussian distribution, also contaminates the image. Although traditional LFM reconstruction is derived under a Poisson noise model, its performance drops quickly when noise is severe and other types of noise appear. Our DiLFM can incorporate mixed Poisson and Gaussian noise contamination as a prior during the training process (Methods), and prevent

noise-induced artifacts and resolution degradations. We show DiLFM achieves superior performance under different noise levels compared to other methods in numerical simulations (Fig. 3d–f).

When the noise level is at 23.5 dB peak signal-to-noise ratio (PSNR), we find DiLFM has a clear background while the RL and anti-aliasing methods still have noisy pixels remaining (Fig. 3d).
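For orientation, a PSNR value such as the one quoted above can be related to pixel-level error with a short calculation. The sketch below is only an illustration, assuming the common definition PSNR = 10 log10(peak² / MSE) with the maximum of the clean image taken as the peak; the text does not state which peak convention is used, and the function name `psnr` is hypothetical.

```python
import numpy as np


def psnr(reference, degraded):
    """Peak signal-to-noise ratio in dB (assumed convention: peak = max of the reference)."""
    reference = np.asarray(reference, dtype=np.float64)
    degraded = np.asarray(degraded, dtype=np.float64)
    # Mean squared error between the clean reference and the degraded image
    mse = np.mean((reference - degraded) ** 2)
    if mse == 0:
        return float("inf")  # identical images: PSNR is unbounded
    peak = reference.max()
    return 10.0 * np.log10(peak ** 2 / mse)
```

Under this convention, an image with unit peak and a root-mean-square error of 0.1 has a PSNR of 20 dB, so the 15.9 dB to 33.2 dB range studied here spans roughly a factor of seven in RMS error.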

DiLFM achieves the least distorted reconstructions of simulated spheres among all methods with the highest SSIM (Fig. 3e). We conduct reconstruction quality assessment through different

noise levels (PSNR ranging from 15.9 dB to 33.2 dB) and find DiLFM achieves the best reconstruction quality across the whole noise range (Fig. 3f). In particular, when the PSNR drops below 20 dB, all other methods show a significant performance drop while our method maintains high performance. A similar performance improvement by DiLFM is also observed in samples with gradual changes in

intensity profiles (Fig. S8). The robust performance of DiLFM in noisy conditions fully unleashes the potential of LFM in long-term in vivo observation of living zebrafish, where

illumination heat damage needs to be carefully avoided. To confirm this potential, we image the zebrafish blood cells (erythrocytes) with only 0.12 mW mm−2 laser power compared to the 6.8 mW mm−2 used in previous experiments (Fig. S6) while the imaging rate remains at 100 Hz. We find the traditional LFM reconstruction at _z_ = −30 µm is

noisy with unrecognizable blood vessels and cells due to extremely low laser power (Fig. 4a, c, e). On the other hand, DiLFM restores clear structures with reduced background (Fig. 4b, d,

e). The quality improvement by DiLFM is 3D instead of 2D, confirmed through better resolved hollow-core vessels and elliptical blood cells (Fig. 4d, e). Benefitting from the improved image

quality, we show that DiLFM significantly increases the counting accuracy of flowing blood cells by simply calculating cross-section intensity fluctuations (Fig. 4f, h), compared to traditional LFM, whose highly noisy baseline makes cell flows hard to judge (Fig. 4f, g). Blood cells reconstructed by DiLFM have much more compact and clearer profiles, which reduces

ambiguity in cell counting (Fig. S10). Finally, we demonstrate DiLFM can achieve better neuron activity inference compared to the traditional LFM method in low-illumination conditions. We

record a HUC: H2B-GCaMP6s larval zebrafish embedded in 1% agar under 0.37 mW mm−2 illumination. We visualize the neuron extraction by plotting the projected standard deviation volume along the temporal axis in Fig. 5a (Methods). The thick larval zebrafish head and low illumination power blur the reconstruction by traditional LFM and generate high background and artifacts, which severely disturb neuron inference (Fig. 5a). On the other hand, our proposed DiLFM technique obtains sharper images with finer spatial details thanks to the learned dictionary prior. The artifacts due to noise and low spatial sampling that cover neurons in traditional LFM are absent in DiLFM. We find neurons are much more easily recognized through DiLFM than through traditional LFM

(Fig. 5c). In the temporal domain, traditional LFM only achieves neuron activities with poor Δ_F_/_F_ since the SNR is low, while DiLFM achieves higher activity contrast since the

background is largely suppressed and noise is smoothed through the dictionary patching (Fig. 5d). In our experiment, DiLFM unveils 779 neurons through CNMF-E32 analysis in an 800 × 600 × 100 µm3 volume, compared to 383 neurons by traditional LFM. The spatial distributions of those active neurons, temporal activities, and temporal correlations are plotted in

Fig. 5e–g. The artifacts of traditional LFM prevent CNMF-E from finding neurons near the NIP, whereas with DiLFM the neurons found by CNMF-E under the same parameters are uniformly distributed across depths (Fig. 5h). The higher fidelity of neuron inference in both the spatial and temporal domains makes DiLFM superior in volumetric functional imaging (Fig. S12). Compared to other LFM techniques

which require scanning33 or multiview imaging22, DiLFM gives an efficient performance-improving solution without any hardware modifications. DISCUSSION In summary, we have developed DiLFM,

an algorithm-enhanced LFM technique that can substantially reduce reconstruction artifacts and maintain high contrast without any hardware modification even in extremely noisy conditions. To

optimize the performance of the proposed DiLFM, we thoroughly discuss the appearance and mechanism of three different kinds of LFM reconstruction artifacts and incorporate them all into a

dictionary patching model to correct them. Furthermore, the proposed dictionary patching increases the reconstruction resolution and contrast by supplying high-resolution and high-contrast

information from the training stage. We validate our DiLFM through both simulations and experiments, including imaging _Drosophila_ embryos and _Drosophila_ brain. We further show DiLFM can

increase the cell-counting accuracy of flowing blood cells in zebrafish in vivo even under extremely noisy conditions to keep the animal safe in long-term recordings. In the functional

imaging experiment, we show DiLFM can discover about twice as many neurons with improved Δ_F_/_F_ and reduced artifact disturbance at low light dosage. We hope the scheme can help LFM become a promising and reliable tool for high-speed imaging of biological tissues in 3D. The proposed DiLFM achieves superior performance compared to traditional LFM while retaining the full advantages of LFM in other aspects. For example, unlike other 3D imaging technologies, the volume acquisition rate of DiLFM is independent of the size of the sample and limited only by the camera frame rate.

Introducing the dictionary only affects the downstream data processing speed without any sacrifice of capturing rate. It is straightforward to extend DiLFM to a larger FOV or a compact

head-mounted LFM27. Furthermore, by introducing photon-scattering models into dictionary priors34, it is possible to exceed the depth-penetration limitation of DiLFM in in vivo mouse-brain

imaging23. Borrowing the idea from DiLFM of using a versatile dictionary to adapt to different imaging environments, other deconvolution-based volumetric imaging methods22,35 can also

use such a prior for better performance in various applications. MATERIALS AND METHODS DILFM OPTICAL SETUP We set up the light field microscope based on a commercial microscope (Zeiss,

Observer Z1) and use a mercury lamp as the illumination source. We use different objectives for different imaging tasks (see Table S1) with the same \(f\) = 165 mm tube lens. The MLA is placed at the image plane of the microscope. The MLA we use has a 100 µm pitch and a 2.1 mm focal length to encode the 3D information. We put a relay system between the

camera (Andor Zyla 4.2 Plus, Oxford Instruments) and the MLA, which conjugates the back-pupil plane of the MLA to the sensor plane. The sensor pixel size is 6.5 µm and the magnification of

the relay lens system is set to be 0.845. DILFM PRINCIPLE The proposed DiLFM can be decomposed into two parts: raw reconstructions through a few runs of RL iterations and fine

reconstructions through dictionary patching. In the following sections, we first mathematically represent the RL iteration of LFM, then describe the way that our proposed dictionary patching

fixes these artifacts and improves the reconstruction resolution and contrast. LIGHT-FIELD MICROSCOPY MODEL AND RL DECONVOLUTION A common light-field microscope is composed of a wide-field microscope and an MLA placed at the native image plane, as shown in Fig. 1. We denote the sample space coordinate as \(\left( {x_1,x_2,z} \right)\) and the sensor space coordinate as

\(\left( s_1,s_2 \right)\). The point spread function (PSF) of LFM can be formulated by

$$h\left( x_1,x_2,z,s_1,s_2 \right) = \left| \Im_{f_\mu}\left\{ U\left( x_1,x_2,z,s_1,s_2 \right)\Phi\left( s_1,s_2 \right) \right\} \right|^2$$ (1)

Here, \(U\left( x_1,x_2,z,s_1,s_2 \right)\) is the optical field in the NIP generated by a point source in \(\left( x_1,x_2,z \right)\), which is defined by36

$$\begin{array}{l} U\left( x_1,x_2,z,s_1,s_2 \right) = \frac{M}{f_{obj}^2\lambda^2}\exp\left( - \frac{iu}{4\sin^2\left( \alpha/2 \right)} \right)\int\nolimits_0^\alpha P\left( \theta \right)\exp\left( - \frac{iu\sin^2\left( \theta/2 \right)}{2\sin^2\left( \alpha/2 \right)} \right)J_0\left( \frac{\sin\left( \theta \right)}{\sin\left( \alpha \right)}v \right)\sin\left( \theta \right)\mathrm{d}\theta \\ v \approx k\sqrt{\left( x_1 - s_1 \right)^2 + \left( x_2 - s_2 \right)^2}\sin\left( \alpha \right) \\ u \approx 4kz\sin^2\left( \alpha/2 \right) \end{array}$$ (2)

\(\Phi\left( s_1,s_2 \right)\) is the modulation function of the MLA which has pitch size \(d\) and focal length \(f_\mu\)

$$\Phi\left( s_1,s_2 \right) = \iint \mathrm{rect}\left( \frac{t_1}{d} \right)\mathrm{rect}\left( \frac{t_2}{d} \right)\exp\left( - \frac{ik}{2f_\mu}\left( t_1^2 + t_2^2 \right) \right)\mathrm{comb}\left( \frac{s_1 - t_1}{d} \right)\mathrm{comb}\left( \frac{s_2 - t_2}{d} \right)\mathrm{d}t_1\mathrm{d}t_2$$ (3)

\(\Im_{f_\mu}\left\{ \cdot \right\}\) is the Fresnel propagation operator which carries

a light field as input and propagates a distance \(f_\mu\) along the optical axis. To reconstruct the 3D sample from the captured image, we need to bin the continuous sample and sensor space

for voxelization and pixelization13. LFM can then be modeled as a linear system \(H\) that maps the 3D sample space into the 2D sensor space

$$\sum\limits_{x_1,x_2,z} H\left( x_1,x_2,z,s_1,s_2 \right)X\left( x_1,x_2,z \right) = Y\left( s_1,s_2 \right)$$ (4)

Here _Y_ is the discrete sensor image and _X_ is the 3D distribution of the sample. The weight matrix _H_ can be sampled from Eq. (1); it records how the photons emitted from the voxel \((x_1,x_2,z)\) spread and contribute to the pixel

\((s_1,s_2)\). Further, the weight matrix \(H\) can be simplified via the periodicity introduced by the MLA, which implies

$$H\left( x_1,x_2,z,s_1,s_2 \right) = H\left( x_1 + D,x_2 + D,z,s_1 + D,s_2 + D \right)$$ (5)

where \(D\) is the pitch of the microlens in units of the pixel size. We simplify Eq. (4) into

\({\mathbf{H}}_{{\mathrm{for}}}\left( X \right) = Y\) to represent the forward projection in LFM. On the other hand, if we trace back each light ray that reaches the sensor, we can rebuild

the sample \(X(x_1,x_2,z)\) via

$$\sum\limits_{s_1,s_2} \frac{H\left( x_1,x_2,z,s_1,s_2 \right)Y\left( s_1,s_2 \right)}{\sum\nolimits_{w_1,w_2} H\left( x_1,x_2,z,w_1,w_2 \right)} = X\left( x_1,x_2,z \right)$$ (6)

We simplify Eq. (6) into \({\mathbf{H}}_{{\mathrm{back}}}\left( Y \right) = X\) to represent the backward projection in

LFM. The RL algorithm is commonly used to refine _X_ from _Y_ and _H_. In each iteration, RL updates \(\hat X^{\left( t \right)}\) from the previous iteration result \(\hat X^{\left( t - 1 \right)}\) via13

$$\hat X^{\left( t \right)} \leftarrow \hat X^{\left( t - 1 \right)} \odot {\mathbf{H}}_{{\mathrm{back}}}\left( \frac{Y}{{\mathbf{H}}_{{\mathrm{for}}}\left( \hat X^{\left( t - 1 \right)} \right)} \right)$$ (7)

where \(\odot\) means element-wise multiplication. We refer to one application of Eq. (7) as one RL iteration. Reconstructing an LFM volume usually requires multiple RL iterations37. On the other hand, running too many RL iterations will cause severe edge

ringing problems. FIXING ARTIFACTS AND IMPROVING CONTRAST THROUGH DICTIONARY PATCHING In this section, we first show how to learn a dual dictionary pair \(\left( {\mathbf{D}}_{l,z},{\mathbf{D}}_{h,z} \right)\) with the LFM model, where \({\mathbf{D}}_{l,z}\) is the collection of the most representative elements of raw LFM reconstructions and \({\mathbf{D}}_{h,z}\) is the collection of the corresponding high-fidelity and artifact-reduced elements. An element here means a local feature of an image, e.g., a corner or an edge. We then

show how to apply the learned dictionaries to achieve high-fidelity and artifact-reduced reconstruction from raw RL reconstruction. We prepare a set of high-fidelity and high-SNR 3D volumes \(\left\{ {I_j^{ref}} \right\}\) to learn the dictionary prior. We numerically feed each reference volume \(I_j^{ref}\) into the LFM forward projection built in Eq. (4) to get an LFM capture \(Y_j^{ref}\), then use the RL deconvolution in Eq. (7) to get a raw reconstructed volume \(\hat I_j^{ref}\). In this way, we generate a set of high- and low-fidelity volume pairs

\(\left\{ {\left( {I_j^{ref},\hat I_j^{ref}} \right)} \right\}\), where the resolution drops and artifacts in \(\hat I_j^{ref}\) are generated through the real LFM model. Since the LFM

artifacts are associated with depth z as discussed in Sec. 2.2, we split the volume pair \(\left\{ {\left( {I_j^{ref},\hat I_j^{ref}} \right)} \right\}\) into different _z_-depth pairs

\(\left\{ {\left( {I_{j,z}^{ref},\hat I_{j,z}^{ref}} \right)} \right\}\) and further generate a patch dataset \({\cal{P}}_z\) regarding _z_-depth for the following training via

$${\cal{P}}_z = \left\{ L_k\left( I_{j,z}^{ref} - \hat I_{j,z}^{ref} \right),L_k\left( F\hat I_{j,z}^{ref} \right) \right\} \triangleq \left\{ p_h^k,p_l^k \right\}$$ (8)

where \(L_k\left( \cdot \right)\) is the linear image-to-patch mapping so that a \(\sqrt n \times \sqrt n\)-pixel patch can be extracted from an

image and \(k\) is the patch index. Patches are randomly selected from the image with overlapping. Since some biological samples are quite sparse, we select patches with enough signal

intensity to avoid null patches. \(F\) is a feature extraction operator that provides a perceptually meaningful representation of a patch38. A common choice for \(F\) is the first- and second-order gradients of the patches. We use \(I_{j,z}^{ref} - \hat I_{j,z}^{ref}\) so that the later learning process focuses on high-frequency information30. We also apply dimensionality reduction to \(\left\{ {p_l^k} \right\}\) through principal component analysis (PCA) to reduce superfluous computation30. After these preparations, the low-fidelity dictionary \({\mathbf{D}}_{l,z}\), which is the collection of the most representative elements at the \(z\)th depth of LFM-reconstructed biological tissue, can be learned via

$${\mathbf{D}}_{l,z},\left\{ \beta^k \right\} = \mathrm{argmin}\sum\limits_k \left\Vert p_l^k - {\mathbf{D}}_{l,z}\beta^k \right\Vert_2^2,\quad \mathrm{s.t.}\ \left\Vert \beta^k \right\Vert_0 \le \kappa,\ \forall k$$ (9)

where \(\Vert \cdot \Vert_2\) is the \(\ell_2\) norm which measures the data fidelity, \(\Vert \cdot \Vert_0\) is the \(\ell_0\) “norm” which measures the sparsity, \(\beta^k\) is the vector of sparse representation coefficients for the low-fidelity patch \(p_l^k\), and \(\kappa\) is the maximum sparsity

tolerance. Equation (9) could be effectively solved by the well-known K-SVD algorithm39. The corresponding high-fidelity dictionary \({\mathbf{D}}_{h,z}\) is generated by solving the

following quadratic programming (QP) problem

$${\mathbf{D}}_{h,z} = \mathrm{argmin}\sum\limits_j \left\Vert I_{j,z}^{ref} - \hat I_{j,z}^{ref} - \left[ \sum\limits_k L_k^TL_k \right]^{-1}\left[ \sum\limits_k L_k^T{\mathbf{D}}_{h,z}\beta^k \right] \right\Vert_2^2$$ (10)

Note that the dictionary pair \(\left( {\mathbf{D}}_{l,z},{\mathbf{D}}_{h,z} \right)\) is specific to each \(z\) since the degradation of imaging quality is depth-dependent. Here we assume the high- and low-fidelity

dictionaries share the same sparse representation \(\left\{ {\beta ^k} \right\}\) based on the assumption that artifact contamination and blur operation in LFM reconstructions are

near-linear (Note S1). The NIP artifact is covered by the dictionary learned in the NIP layer. The defocus artifact is also covered since the whole reconstructed volume is learned instead of

only learning single-image reconstructions, in contrast to the traditional dictionary learning method38. The high-fidelity and artifact-free reference volumes \(\left\{ {I_j^{ref}} \right\}\) are collected from the Broad Bioimage Benchmark Collection Nos. 021, 027, 032, and 033 (ref. 40) and the SOCR 3D Cell Morphometry Project Data41. The flowchart of the LFM dictionary learning process

is shown in Fig. S1a. To achieve high-fidelity and artifact-reduced volume \(\tilde X^{(t)}\) from raw RL reconstruction volume \(\hat X^{(t)}\), we run sparse representation for each z

depth of \(\hat X^{(t)}\) with the learned _z_-depth dictionary prior \(\left( {{\mathbf{D}}_{l,z},{\mathbf{D}}_{h,z}} \right)\). Firstly, we estimate the sparse representation of each local

patch of \(\hat X_z^{(t)}\). We extract the local patch from \(\hat X_z^{(t)}\) by the same mapping \(L_k\left( \cdot \right)\) as above with the size of \(\sqrt n \times \sqrt n\)-pixel,

then search a sparse coding vector \(\alpha _z^k\) such that \(L_k\hat X_z^{(t)}\) can be sparsely represented as the weighted summation of a few elements from \({\mathbf{D}}_{l,z}\)

$$\begin{array}{*{20}{c}} {\min \Vert \alpha_{z}^k \Vert_0, \qquad {\mathrm{s.t.}}\ \Big\Vert FL_k\hat X_z^{(t)} - {\mathbf{D}}_{l,z}\alpha_{z}^k \Big\Vert_2 \le \epsilon} \end{array}$$ (11)

where \(\epsilon\) is the error tolerance. Eq. (11) can be solved via the orthogonal matching pursuit (OMP) algorithm42. Secondly, we use the found sparse coefficients \(\alpha _z^k\) to

recover the high-fidelity and artifact-reduced patch \(p_{h,z}^k\) by \(p_{h,z}^k = {\mathbf{D}}_{h,z}\alpha _z^k\), then accumulate the patches \(p_{h,z}^k\) to form a high-fidelity image \(\tilde X_z^{(t)}\) by solving the following minimization problem:

$$\begin{array}{*{20}{c}} {\tilde X_z^{(t)} = \mathop{\mathrm{argmin}}\limits_{\tilde X_z^{(t)}} \displaystyle\mathop {\sum}\limits_k \Big\Vert L_k\left( {\tilde X_z^{(t)} - \hat X_z^{(t)}} \right) - p_{h,z}^{k} \Big\Vert_2^2} \end{array}$$ (12)

After concatenating \(\tilde X_z^{(t)}\) into the whole volume \(\tilde X^{(t)}\), a high-fidelity

and artifact-reduced volume is recovered from the original RL reconstruction \(\hat X^{(t)}\). The flowchart of the reconstruction process is shown in Fig. S1b. To choose a proper number of RL iterations before dictionary patching, one can visually check the RL output: if edge ringing appears, the number of RL iterations should be reduced. For samples with uniform intensity distribution, 1 RL iteration is enough. The RL iteration numbers of all experiments in the manuscript can be found in Table S2.

DICTIONARY TRAINING WITH NOISE

We train the dictionary with mixed Poisson and Gaussian noise contamination. The dark noise and the photon noise of fluorescence imaging follow a Poisson distribution, while the readout noise follows a Gaussian distribution.
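As a hedged illustration of this noise composition (formalized in Eq. (13)), the following Python sketch applies scaled Poisson noise plus additive Gaussian noise to a noise-free sensor image. It is not the authors' MATLAB pipeline, which relies on imnoise. The function names `sample_poisson` and `add_mixed_noise`, the flat-list image representation, and the normal approximation used for large Poisson means are all assumptions made to keep the example short and dependency-free.

```python
import math
import random


def sample_poisson(lam, rng):
    """Draw one Poisson(lam) sample.

    Uses Knuth's multiplication method for lam <= 50 and, as a simplifying
    assumption of this sketch, a clipped normal approximation above that.
    """
    if lam <= 0:
        return 0
    if lam > 50:
        return max(0, round(rng.gauss(lam, math.sqrt(lam))))
    threshold = math.exp(-lam)
    k, p = 0, rng.random()
    while p > threshold:
        k += 1
        p *= rng.random()
    return k


def add_mixed_noise(clean, alpha, sigma2, seed=0):
    """Return alpha * Poisson(clean / alpha) + N(0, sigma2) for each pixel.

    `clean` is a flat list holding the noise-free sensor image, i.e. the
    sample after forward propagation; the propagation itself is not modeled.
    """
    rng = random.Random(seed)
    sigma = math.sqrt(sigma2)
    return [
        alpha * sample_poisson(value / alpha, rng) + rng.gauss(0.0, sigma)
        for value in clean
    ]
```

Under this model a pixel with noise-free value \(v\) has variance of approximately \(\alpha v + \sigma ^2\), so increasing \(\alpha\) strengthens the signal-dependent part of the noise while \(\sigma ^2\) sets the signal-independent floor.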

Hence, we choose mixed Poisson and Gaussian noise to mimic the real situation. The observed image under the microscope can thus be modeled as43

$$\begin{array}{*{20}{c}} {Y = \alpha {\rm{P}}\left( {\frac{{{ \mathbf{H} }_{{\mathrm{for}}}\left( X \right)}}{\alpha }} \right) + {\mathbb{N}}\left( {0,\sigma ^2} \right)} \end{array}$$ (13)

where \(Y\) is the observed image, \({\mathbf{H}}_{{\mathrm{for}}}\) is the forward propagator of LFM, \(X\) is the noise-free sample, \(\alpha\) is the scaling factor that controls the strength of the Poisson noise, \({\mathrm{P}}( \cdot )\) is the realization of Poisson noise, and \({\mathbb{N}}\left( {0,\sigma ^2} \right)\) represents Gaussian noise with zero mean and variance \(\sigma ^2\). We fix

\(\sigma ^2\) to be ~200 for 16-bit sCMOS images and vary \(\alpha\) to generate captures with different noise levels. The high-fidelity and artifact-free reference volumes \(\left\{ {I_j^{ref}} \right\}\) are first propagated to the sensor plane, and Poisson and Gaussian noise is then added with the MATLAB function imnoise to form \(\left\{ {\hat I_j^{ref}} \right\}\). Then, the noise-aware dictionary is learned through Eqs. (9) and (10). \(\left\{ {\hat I_j^{ref}} \right\}\) contains multiple levels of noise to accommodate different SNR conditions. The trained low- and high-fidelity dictionaries have different element numbers and patch sizes to accommodate different modalities (see Table S2).

SAMPLE PREPARATION

DROSOPHILA EMBRYO IMAGING

The _Drosophila_

embryo used in this study (Fig. 2) expressed histone tagged with EGFP (w; His2Av::eGFP; Bloomington stock #23560). The embryos were collected by putting adult flies on a grape-juice agar plate for 45 min–1 h. After incubation at 25 °C for 1 h, the embryos were attached to a glass slide with double-sided tape. We used forceps to carefully roll each embryo on the tape until it was dechorionated. The dechorionated embryos were embedded in 2% low-melting-temperature agarose in a glass-bottom dish (35 mm dish with 20 mm bottom well, Cellvis). We put the dish on the microscope stage and scanned the embryo along the _z_-axis 4 times with a 30 µm stride, then concatenated the 4 reconstructed stacks to form the volume.

DROSOPHILA BRAIN IMAGING

The _Drosophila_ adult brain (w1118) used in this study (Fig. S5) was dissected at 4–5 days after eclosion in phosphate-buffered saline (PBS) and fixed with 4% paraformaldehyde in PBST (PBS with 0.3% Triton X-100) for 30 min. After washing in PBST, the brain was blocked in 5% normal mouse serum in PBST for 2 h at room temperature (RT) and then immunostained using commercial antibodies. The brain was incubated in primary antibodies (mouse anti-nc82, 1:20, Hybridoma Bank) and secondary antibodies (goat anti-mouse Alexa-488, 1:200, Invitrogen) for 48–72 h at 4 °C, with a 2 h wash at 4 °C between the primary and secondary antibody incubations. After that, the brain was washed 3–4 times in PBST and cut into slices of ~60 µm thickness. A slice was mounted and observed by the LFM in epifluorescence mode. No concatenation was made and no further deconvolution was applied.

ZEBRAFISH BLOOD CELL IMAGING

Zebrafish from the

transgenic line Tg(gata1:DsRed) were used in this study for blood cell imaging (Fig. 4, Fig. S6). For two-color recordings (Fig. 4), zebrafish from the transgenic line Tg(gata1:DsRed) were crossed with zebrafish from the transgenic line Tg(flk: EGFP). The embryos were raised at 28.5 °C until 4 dpf. Larval zebrafish were paralyzed by short immersion in 1 mg ml−1 \({\upalpha}\)-bungarotoxin solution (Invitrogen). After paralysis, the larvae were embedded in 1% low-melting-temperature agarose in a glass-bottom dish (35 mm dish with 20 mm bottom well, Cellvis). We maintained the specimen at room temperature and imaged the zebrafish larvae at 100 Hz.

ZEBRAFISH FUNCTIONAL IMAGING

Zebrafish from the transgenic line Tg(HUC: GCaMP6s)

expressing the calcium indicator GCaMP6s were raised at 28.5 °C until 4 dpf for short-term functional imaging (Fig. 1b and Fig. 5). Larval zebrafish were paralyzed by short immersion in 1 mg ml−1 \({\upalpha}\)-bungarotoxin solution (Invitrogen). After paralysis, the larvae were embedded in 1% low-melting-temperature agarose in a glass-bottom dish (35 mm dish with 20 mm bottom well, Cellvis). For imaging, the dorsal side of the head of the larval zebrafish faced the objective. We maintained the specimen at room temperature and imaged the zebrafish larvae at 1 Hz. Let the volume reconstructed by DiLFM be \(\tilde X(x,y,z,t)\), where \(\left( {x,y,z} \right)\) is the 3D spatial coordinate of the voxel and \(t\) labels the time. The temporal

summarized volume was calculated through the following procedure. In the first step, we calculate the rank-1 background components of \(\tilde X(x,y,z,t)\) via

$$\begin{array}{*{20}{c}} {\left[ {b,f} \right] = \arg \mathop {{\min }}\limits_{b,f} \displaystyle\mathop{\sum}\limits_t \left\Vert \tilde X(x,y,z,t) - b(x,y,z) \cdot f\left( t \right) \right\Vert_2^2} \end{array}$$ (14)

where \(b(x,y,z)\) is the spatial background and \(f\left( t \right)\) is the temporal background. \(b\) and \(f\) can be calculated through standard non-negative matrix factorization techniques44. The background-subtracted volume is then calculated by \(\tilde X_1(x,y,z,t) = \tilde X(x,y,z,t) - b(x,y,z) \cdot f\left( t \right)\). Then, we calculate the standard deviation

volume of all the background-subtracted volumes across the time domain via

$$\begin{array}{*{20}{c}} {\tilde X_2\left( {x,y,z} \right) = \sqrt {\frac{{\mathop {\sum}\nolimits_t \left( {\tilde X_1\left( {x,y,z,t} \right) - \frac{{\mathop {\sum}\nolimits_s \tilde X_1\left( {x,y,z,s} \right)}}{T}} \right)^2}}{T}} } \end{array}$$ (15)

where \(T\) is the total frame number. In Fig. 5a, we plot the maximum intensity projections of \(\tilde X_2\left( {x,y,z} \right)\) along the \(x\)-, \(y\)-, and \(z\)-axes to show the distribution of active neurons in zebrafish larvae. All captured frames are used for the above calculation.

DATA AVAILABILITY

The training datasets for the _Drosophila_ embryo, _Drosophila_ brain, zebrafish blood flow, and zebrafish brain

imaging experiments are available at https://drive.google.com/drive/folders/1DzYc6NfO1O_jx314Op4ggvV7LT4BTrzB?usp=sharing. Scripts used for the fluorescent bead simulations can be found at https://github.com/yuanlong-o/DiLFM. The pre-trained dictionary for _Drosophila_ embryo imaging and the corresponding DiLFM results are also available at https://github.com/yuanlong-o/DiLFM.

CODE AVAILABILITY

Our MATLAB implementation of DiLFM is available at https://github.com/yuanlong-o/DiLFM.

REFERENCES

1. Villette, V. et al. Ultrafast two-photon imaging of a high-gain voltage indicator in awake behaving mice. _Cell_ 179, 1590–1608.e23 (2019).
2. Dana, H. et al. High-performance calcium sensors for imaging activity in neuronal populations and microcompartments. _Nat. Methods_ 16, 649–657 (2019).
3. Voleti, V. et al. Real-time volumetric microscopy of in vivo dynamics and large-scale samples with SCAPE 2.0. _Nat. Methods_ 16, 1054–1062 (2019).
4. McDole, K. et al. _In toto_ imaging and reconstruction of post-implantation mouse development at the single-cell level. _Cell_ 175, 859–876.e33 (2018).
5. Dey, N. et al. Richardson-Lucy algorithm with total variation regularization for 3D confocal microscope deconvolution. _Microsc. Res. Tech._ 69, 260–266 (2006).
6. McNally, J. G. et al. Three-dimensional imaging by deconvolution microscopy. _Methods_ 19, 373–385 (1999).
7. Xu, C. et al. Multiphoton fluorescence excitation: new spectral windows for biological nonlinear microscopy. _Proc. Natl Acad. Sci. USA_ 93, 10763–10768 (1996).
8. Keller, P. J. et al. Fast, high-contrast imaging of animal development with scanned light sheet-based structured-illumination microscopy. _Nat. Methods_ 7, 637–642 (2010).
9. Gustafsson, M. G. L. Nonlinear structured-illumination microscopy: wide-field fluorescence imaging with theoretically unlimited resolution. _Proc. Natl Acad. Sci. USA_ 102, 13081–13086 (2005).
10. Bewersdorf, J., Pick, R. & Hell, S. W. Multifocal multiphoton microscopy. _Opt. Lett._ 23, 655–657 (1998).
11. Prevedel, R. et al. Fast volumetric calcium imaging across multiple cortical layers using sculpted light. _Nat. Methods_ 13, 1021–1028 (2016).
12. Salomé, R. et al. Ultrafast random-access scanning in two-photon microscopy using acousto-optic deflectors. _J. Neurosci. Methods_ 154, 161–174 (2006).
13. Broxton, M. et al. Wave optics theory and 3-D deconvolution for the light field microscope. _Opt. Express_ 21, 25418–25439 (2013).
14. Prevedel, R. et al. Simultaneous whole-animal 3D imaging of neuronal activity using light-field microscopy. _Nat. Methods_ 11, 727–730 (2014).
15. Levoy, M. et al. Light field microscopy. _ACM Trans. Graph._ 25, 924–934 (2006).
16. Lin, X. et al. Camera array based light field microscopy. _Biomed. Opt. Express_ 6, 3179–3189 (2015).
17. Cong, L. et al. Rapid whole brain imaging of neural activity in freely behaving larval zebrafish (_Danio rerio_). _eLife_ 6, e28158 (2017).
18. Li, H. Y. et al. Fast, volumetric live-cell imaging using high-resolution light-field microscopy. _Biomed. Opt. Express_ 10, 29–49 (2019).
19. Guo, C. L. et al. Fourier light-field microscopy. _Opt. Express_ 27, 25573–25594 (2019).
20. Richardson, W. H. Bayesian-based iterative method of image restoration. _J. Opt. Soc. Am._ 62, 55–59 (1972).
21. Lucy, L. B. An iterative technique for the rectification of observed distributions. _Astron. J._ 79, 745 (1974).
22. Wagner, N. et al. Instantaneous isotropic volumetric imaging of fast biological processes. _Nat. Methods_ 16, 497–500 (2019).
23. Nöbauer, T. et al. Video rate volumetric Ca2+ imaging across cortex using seeded iterative demixing (SID) microscopy. _Nat. Methods_ 14, 811–818 (2017).
24. Pégard, N. C. et al. Compressive light-field microscopy for 3D neural activity recording. _Optica_ 3, 517–524 (2016).
25. Cohen, N. et al. Enhancing the performance of the light field microscope using wavefront coding. _Opt. Express_ 22, 24817–24839 (2014).
26. Wu, J. M. et al. Iterative tomography with digital adaptive optics permits hour-long intravital observation of 3D subcellular dynamics at millisecond scale. _Cell_ (2021).
27. Skocek, O. et al. High-speed volumetric imaging of neuronal activity in freely moving rodents. _Nat. Methods_ 15, 429–432 (2018).
28. Stefanoiu, A. et al. Artifact-free deconvolution in light field microscopy. _Opt. Express_ 27, 31644–31666 (2019).
29. Lu, Z. et al. Phase-space deconvolution for light field microscopy. _Opt. Express_ 27, 18131–18145 (2019).
30. Zeyde, R., Elad, M. & Protter, M. On single image scale-up using sparse-representations. in _Proc. 7th International Conference on Curves and Surfaces_. (Springer, Avignon, 2012).
31. Wang, Z. et al. Image quality assessment: from error visibility to structural similarity. _IEEE Trans. Image Process._ 13, 600–612 (2004).
32. Zhou, P. C. et al. Efficient and accurate extraction of in vivo calcium signals from microendoscopic video data. _eLife_ 7, e28728 (2018).
33. Zhang, Z. K. et al. Imaging volumetric dynamics at high speed in mouse and zebrafish brain with confocal light field microscopy. _Nat. Biotechnol._ 39, 74–83 (2021).
34. Liu, H. Y. et al. 3D imaging in volumetric scattering media using phase-space measurements. _Opt. Express_ 23, 14461–14471 (2015).
35. Guo, C. L., Liu, W. H. & Jia, S. Fourier-domain light-field microscopy. _Biophotonics Congress: Optics in the Life Sciences Congress 2019_. (OSA, Tucson, 2019).
36. Gu, M. _Advanced Optical Imaging Theory_. (Springer, Berlin, 2000).
37. Zheng, W. et al. Adaptive optics improves multiphoton super-resolution imaging. _Nat. Methods_ 14, 869–872 (2017).
38. Yang, J. C. et al. Image super-resolution via sparse representation. _IEEE Trans. Image Process._ 19, 2861–2873 (2010).
39. Aharon, M., Elad, M. & Bruckstein, A. K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. _IEEE Trans. Signal Process._ 54, 4311–4322 (2006).
40. Ljosa, V., Sokolnicki, K. L. & Carpenter, A. E. Annotated high-throughput microscopy image sets for validation. _Nat. Methods_ 9, 637 (2012).
41. Kalinin, A. A. et al. 3D cell nuclear morphology: microscopy imaging dataset and voxel-based morphometry classification results. in _Proc. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops_. (IEEE, Salt Lake City, 2018).
42. Pati, Y. C., Rezaiifar, R. & Krishnaprasad, P. S. Orthogonal matching pursuit: recursive function approximation with applications to wavelet decomposition. in _Proc. 27th Asilomar Conference on Signals, Systems and Computers_. (IEEE, Pacific Grove, 1993).
43. Rasal, T. et al. Mixed poisson gaussian noise reduction in fluorescence microscopy images using modified structure of wavelet transform. _IET Image Process._ 15, 1383–1398 (2021).
44. Pnevmatikakis, E. A. et al. Simultaneous denoising, deconvolution, and demixing of calcium imaging data. _Neuron_ 89, 285–299 (2016).

ACKNOWLEDGEMENTS

The authors were supported by the National Natural Science Foundation of China (62088102, 62071272, and 61927802), the National Key

Research and Development Program of China (2020AAA0130000), the Postdoctoral Science Foundation of China (2019M660644), and the Tsinghua University Initiative Scientific Research Program.

The authors were also supported by the Beijing Laboratory of Brain and Cognitive Intelligence, Beijing Municipal Education Commission. J.W. was also funded by the National Postdoctoral

Program for Innovative Talent and the Shuimu Tsinghua Scholar Program. The authors would like to acknowledge Xuemei Hu from Nanjing University for helpful discussions about the reconstruction algorithm; Menghua Wu from Tsinghua University for His2Av::eGFP _Drosophila_ stocks; Yinjun Jia from Tsinghua University for the _Drosophila_ adult brain; Dong Jiang from Tsinghua University for Tg(gata1:DsRed) zebrafish stocks; and Zheng Jiang from Tsinghua University for Tg(HUC: GCaMP6s) zebrafish stocks.

AUTHOR INFORMATION

Author notes

* These authors contributed equally: Yuanlong Zhang, Bo Xiong

AUTHORS AND AFFILIATIONS

* Department of Automation, Tsinghua University, Beijing, 100084, China: Yuanlong Zhang, Bo Xiong, Yi Zhang, Zhi Lu, Jiamin Wu & Qionghai Dai
* Institute for Brain and Cognitive Sciences, Tsinghua University, Beijing, 100084, China: Yuanlong Zhang, Bo Xiong, Yi Zhang, Zhi Lu, Jiamin Wu & Qionghai Dai
* Beijing National Research Center for Information Science and Technology, Tsinghua University, Beijing, 100084, China: Yuanlong Zhang, Bo Xiong, Yi Zhang, Zhi Lu, Jiamin Wu & Qionghai Dai

CONTRIBUTIONS

Q.D., J.W., and Y.Z. conceived this project. Q.D. supervised this research. Y.Z. designed DiLFM algorithm implementations and conducted numerical simulations. B.X., Z.L., and Yi Z. designed and set up the imaging system. B.X. captured experimental data for _Drosophila_ brain, _Drosophila_ embryo, and zebrafish blood flow. Y.Z. and B.X. captured experimental data for zebrafish calcium imaging. Y.Z. and B.X. processed the data. All authors participated in the writing of the paper.

CORRESPONDING AUTHORS

Correspondence to Jiamin Wu or Qionghai Dai.

ETHICS DECLARATIONS

COMPETING INTERESTS

The authors declare no competing interests.

SUPPLEMENTARY INFORMATION

Supplement figures and tables

RIGHTS AND PERMISSIONS

OPEN ACCESS This

article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as

you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party

material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s

Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

ABOUT THIS ARTICLE

CITE THIS ARTICLE

Zhang, Y., Xiong, B., Zhang, Y. _et al._ DiLFM: an artifact-suppressed and noise-robust light-field microscopy through dictionary learning. _Light Sci Appl_ 10, 152 (2021). https://doi.org/10.1038/s41377-021-00587-6

* Received: 05 December 2020
* Revised: 04 June 2021
* Accepted: 02 July 2021
* Published: 27 July 2021
* DOI: https://doi.org/10.1038/s41377-021-00587-6
